
Documentation

1 - Overview

What is KubeAdmiral

KubeAdmiral is a multi-Kubernetes management engine that is compatible with native Kubernetes APIs. It provides rich scheduling policies and an extensible scheduling framework that help you deploy and manage cloud-native applications in multi-cloud, hybrid cloud, and edge environments without modifying the applications.

Key features

Unified Management of Multi-Clusters

- Support for managing Kubernetes clusters from public cloud providers such as Volcano Engine, Alibaba Cloud, Huawei Cloud, Tencent Cloud, and others.

- Support for managing Kubernetes clusters from private cloud providers.

- Support for managing user-built Kubernetes clusters.

Multi-Cluster Application Propagation

- Compatibility with various types of applications
  - Native Kubernetes resources such as Deployments, StatefulSets, ConfigMaps, etc.
  - Custom Resource Definitions (CRDs), with support for collecting custom status fields and enabling replica mode scheduling.
  - Helm Charts.
- Cross-cluster scheduling modes
  - Duplicate mode for multi-cluster application propagation.
  - Static weight mode for replica propagation.
  - Dynamic weight mode for replica propagation.
- Cluster selection strategies
  - Specifying member clusters explicitly.
  - All member clusters.
  - Cluster selection based on labels.
- Automatic propagation of dependencies with follower scheduling
  - Built-in follower resources, such as ConfigMaps and Secrets referenced by workloads.
  - Specified follower resources, where workloads specify follower resources such as Services and Ingresses in labels.
- Rescheduling strategy configuration
  - Support for enabling/disabling rescheduling.
  - Support for configuring rescheduling trigger conditions.
- Seamless takeover of existing single-cluster resources
- Overriding member cluster resource configurations with override policies


Failover

- Manually evict applications in case of cluster failures
- Automatic migration of applications across clusters in case of application failures
  - Automatic migration of application replicas when they cannot be scheduled.
  - Automatic migration of faulty replicas when they recover.

Architecture

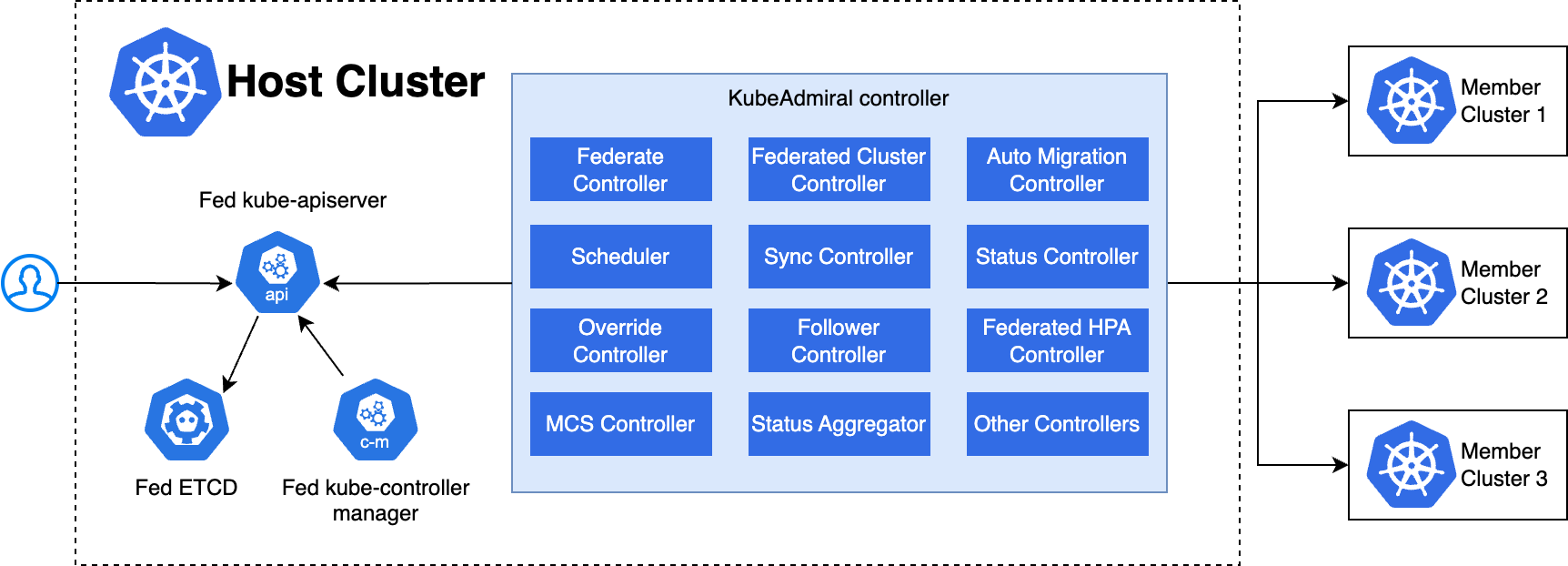

The overall architecture of KubeAdmiral is shown as below:

The KubeAdmiral control plane runs in the host cluster and consists of the following components:

- Fed ETCD: Stores the KubeAdmiral API objects and the federated Kubernetes objects managed by KubeAdmiral.

- Fed Kube Apiserver: A native Kubernetes API server that serves as the entry point for operations on federated Kubernetes objects.

- Fed Kube Controller Manager: A native Kubernetes controller manager that selectively enables specific controllers as needed, such as the namespace controller and the garbage collector (GC) controller.

- KubeAdmiral Controller: Provides the core control logic of the entire system, such as member cluster management, resource scheduling and propagation, fault migration, and status aggregation.

The KubeAdmiral Controller consists of a scheduler and various controllers that perform core functionalities. Below are several key components:

- Federated Cluster Controller: Watches FederatedCluster objects and manages the lifecycle of member clusters, including adding and removing member clusters and collecting their status.

- Federate Controller: Watches Kubernetes resources and creates a FederatedObject for each individual resource.

- Scheduler: Responsible for scheduling resources to member clusters. In the replica scheduling scenario, it calculates the number of replicas each cluster should receive based on various factors.

- Sync Controller: Watches FederatedObject objects and is responsible for distributing federated resources to each member cluster.

- Status Controller: Responsible for collecting the status of resources deployed in member clusters. It retrieves and aggregates the status information of federated resources from each member cluster.

Concepts

Cluster concepts

Federation Control Plane (Federation/KubeAdmiral Cluster)

The Federation Control Plane (KubeAdmiral control plane) consists of core components of KubeAdmiral, providing a consistent Kubernetes API to the outside world. Users can use the Federation Control Plane for multi-cluster management, application scheduling and propagation, and other operations.

Member Clusters

Kubernetes clusters that have joined the Federation Control Plane. Different types of Kubernetes clusters can join the Federation Control Plane as member clusters; member clusters follow the propagation policies of the Federation Control Plane to complete application propagation.

Core concepts

Federated Namespace

A namespace in the Federation Control Plane is a Federated Namespace. When a namespace is created in the Federation Control Plane, the system automatically creates a Kubernetes namespace with the same name in all member clusters. Otherwise, a Federated Namespace has the same meaning and function as a Kubernetes namespace, and can be used for logical isolation and fine-grained management of user resources.

PropagationPolicy/ClusterPropagationPolicy

KubeAdmiral defines the policies for multi-cluster resource propagation through PropagationPolicy/ClusterPropagationPolicy. According to these policies, multiple replicas of application instances can be distributed to specified member clusters. When a single cluster fails, application replicas can be flexibly scheduled to other clusters to ensure high availability of the business. Currently supported scheduling modes are Duplicate (replicas are duplicated in each cluster) and Divide (replicas are divided among clusters).

OverridePolicy/ClusterOverridePolicy

OverridePolicy/ClusterOverridePolicy defines different configurations for the same resource when it is propagated to different member clusters. It uses JSON Patch syntax and supports three override operations: add, remove, and replace.
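To make the three override operations concrete, here is a minimal Python sketch of RFC 6902 JSON Patch `add`, `remove`, and `replace` applied to a resource represented as a dict. It illustrates the patch semantics only and is not KubeAdmiral's override implementation; the helper names and the `tier` label in the example are hypothetical, and only object keys and array indices (including `-` for append) are supported in paths.

```python
import copy
from typing import Any


def parse_pointer(path: str) -> list[str]:
    """Split a JSON Pointer (RFC 6901) such as "/spec/replicas" into tokens."""
    if not path.startswith("/"):
        raise ValueError(f"expected a non-root JSON Pointer, got {path!r}")
    # RFC 6901: decode "~1" to "/" first, then "~0" to "~".
    return [t.replace("~1", "/").replace("~0", "~") for t in path[1:].split("/")]


def apply_patch(doc: Any, ops: list[dict]) -> Any:
    """Apply JSON Patch add/remove/replace operations to a deep copy of doc."""
    result = copy.deepcopy(doc)
    for op in ops:
        *parents, last = parse_pointer(op["path"])
        target = result
        for tok in parents:  # walk down to the container holding the last token
            target = target[int(tok)] if isinstance(target, list) else target[tok]
        kind = op["op"]
        if isinstance(target, list):
            if kind == "add":  # "-" appends; a number inserts before that index
                target.insert(len(target) if last == "-" else int(last), op["value"])
            elif kind == "remove":
                del target[int(last)]
            elif kind == "replace":
                target[int(last)] = op["value"]
            else:
                raise ValueError(f"unsupported op: {kind}")
        else:
            if kind == "add":
                target[last] = op["value"]
            elif kind in ("remove", "replace"):
                if last not in target:  # both ops require an existing member
                    raise KeyError(op["path"])
                if kind == "remove":
                    del target[last]
                else:
                    target[last] = op["value"]
            else:
                raise ValueError(f"unsupported op: {kind}")
    return result


# Example: override replicas and labels for one member cluster's copy of a Deployment.
deployment = {
    "metadata": {"labels": {"app": "echo-server", "tier": "web"}},
    "spec": {"replicas": 6},
}
patched = apply_patch(deployment, [
    {"op": "replace", "path": "/spec/replicas", "value": 2},
    {"op": "add", "path": "/metadata/labels/region", "value": "beijing"},
    {"op": "remove", "path": "/metadata/labels/tier"},
])
print(patched)
```

The patch is applied to a copy, so the resource template stays unchanged while each member cluster can receive its own overridden version.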

Resource Propagation

Distributing and scheduling native Kubernetes resources across multiple clusters within a federation is the core capability of KubeAdmiral:

- It provides replica scheduling modes such as duplicated propagation, dynamic weight propagation, and static weight propagation.

- It supports strategies such as automatically propagating an application's associated resources to the same member clusters, automatically migrating applications to other clusters after failures, and automatically taking over conflicting resources in member clusters, which flexibly meet resource scheduling requirements in multi-cluster scenarios.

2 - Getting Started

2.1 - Installation

Local installation

Prerequisites

Make sure the following tools are installed in the environment before installing KubeAdmiral:

Installation steps

If you want to understand how KubeAdmiral works, you can easily start a cluster with KubeAdmiral control plane on your local computer.

1. Clone the KubeAdmiral repository to your local environment:

$ git clone https://github.com/kubewharf/kubeadmiral

2. Switch to the KubeAdmiral directory:

$ cd kubeadmiral

3. Install and start KubeAdmiral:

$ make local-up

This command mainly performs the following tasks:

- Use the Kind tool to start a meta-cluster;

- Install the KubeAdmiral control plane components on the meta-cluster;

- Use the Kind tool to start 3 member clusters;

- Bootstrap the joining of the 3 member clusters to the federated control plane;

- Export the cluster kubeconfigs to the $HOME/.kube/kubeadmiral directory.

If all the previous steps succeed, you should see the following message:

Your local KubeAdmiral has been deployed successfully!

To start using your KubeAdmiral, run:

export KUBECONFIG=$HOME/.kube/kubeadmiral/kubeadmiral.config

To observe the status of KubeAdmiral control-plane components, run:

export KUBECONFIG=$HOME/.kube/kubeadmiral/meta.config

To inspect your member clusters, run one of the following:

export KUBECONFIG=$HOME/.kube/kubeadmiral/member-1.config

export KUBECONFIG=$HOME/.kube/kubeadmiral/member-2.config

export KUBECONFIG=$HOME/.kube/kubeadmiral/member-3.config

4. Wait for all member clusters to join KubeAdmiral

After the member clusters have successfully joined KubeAdmiral, you can observe their detailed status through the control plane. In the output below, all three member clusters have joined KubeAdmiral, and both their READY and JOINED statuses are True.

$ kubectl get fcluster
NAME                   READY   JOINED   AGE
kubeadmiral-member-1   True    True     1m
kubeadmiral-member-2   True    True     1m
kubeadmiral-member-3   True    True     1m
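If you want to script this waiting step, the following Python sketch polls the `kubectl get fcluster` command shown above and checks the READY and JOINED columns. It assumes `kubectl` is on the PATH and that KUBECONFIG points at the KubeAdmiral control plane; the helper names, the polling interval, and the default of 3 clusters (matching `make local-up`) are illustrative choices, not part of KubeAdmiral.

```python
import subprocess
import time


def all_clusters_joined(table: str, expected: int) -> bool:
    """Return True if `expected` clusters are listed and all are READY and JOINED.

    `table` is the plain-text output of `kubectl get fcluster`, whose columns
    are NAME, READY, JOINED, AGE.
    """
    lines = [line.split() for line in table.strip().splitlines()]
    rows = [cols for cols in lines if cols and cols[0] != "NAME"]  # skip the header
    if len(rows) != expected:
        return False
    return all(len(cols) >= 3 and cols[1] == "True" and cols[2] == "True" for cols in rows)


def wait_for_clusters(expected: int = 3, timeout_s: float = 300, interval_s: float = 5) -> bool:
    """Poll `kubectl get fcluster` until all clusters have joined or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        out = subprocess.run(
            ["kubectl", "get", "fcluster"], capture_output=True, text=True, check=False
        ).stdout
        if all_clusters_joined(out, expected):
            return True
        time.sleep(interval_s)
    return False
```

Parsing is kept in a separate function so it can be checked against captured output without a running cluster.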

Installing KubeAdmiral with the Helm Chart

Prerequisites

Make sure the following tools are installed in the environment before installing KubeAdmiral:

Installation steps

If you already have a Kubernetes cluster, you can install the KubeAdmiral control plane on it using the Helm chart. To install KubeAdmiral, follow these steps:

1. Get the KubeAdmiral chart package and install it:

Clone the repository to get the chart package locally, then install it:

$ git clone https://github.com/kubewharf/kubeadmiral

$ cd kubeadmiral

$ helm install kubeadmiral -n kubeadmiral-system --create-namespace --dependency-update ./charts/kubeadmiral

2. Wait and check whether the chart has been installed successfully

Using the kubeconfig of your Kubernetes cluster, check whether the following KubeAdmiral components are running:

$ kubectl get pods -n kubeadmiral-system
NAME                                                  READY   STATUS    RESTARTS      AGE
etcd-0                                                1/1     Running   0             13h
kubeadmiral-apiserver-5767cd4f56-gvnqq                1/1     Running   0             13h
kubeadmiral-controller-manager-5f598574c9-zjmf9       1/1     Running   0             13h
kubeadmiral-hpa-aggregator-59ccd7b484-phbr6           2/2     Running   0             13h
kubeadmiral-kube-controller-manager-6bd7dcf67-2zpqw   1/1     Running   2 (13h ago)   13h

3. Export the kubeconfig of KubeAdmiral

After you run the following command, the kubeconfig for connecting to KubeAdmiral is exported to the kubeadmiral-kubeconfig file.

Note that the address in this kubeconfig is the internal service address of kubeadmiral-apiserver:

$ kubectl get secret -n kubeadmiral-system kubeadmiral-kubeconfig-secret -o jsonpath='{.data.kubeconfig}' | base64 -d > kubeadmiral-kubeconfig

If you specified an external address when installing KubeAdmiral (apiServer.externalIP in the configuration parameters below), a kubeconfig that uses the external address is also generated automatically. You can export it to the external-kubeadmiral-kubeconfig file by running the following command:

$ kubectl get secret -n kubeadmiral-system kubeadmiral-kubeconfig-secret -o jsonpath='{.data.external-kubeconfig}' | base64 -d > external-kubeadmiral-kubeconfig

Uninstallation steps

Uninstall the KubeAdmiral Helm chart in the kubeadmiral-system namespace:

$ helm uninstall -n kubeadmiral-system kubeadmiral

This command deletes all Kubernetes resources associated with the chart.

Note: The following permission and namespace resources are relied on when installing and uninstalling the Helm chart, so they cannot be deleted automatically. You need to clean them up manually:

$ kubectl delete clusterrole kubeadmiral-pre-install-job

$ kubectl delete clusterrolebinding kubeadmiral-pre-install-job

$ kubectl delete ns kubeadmiral-system

Configuration parameters

| Name | Description | Default Value |
|---|---|---|
| clusterDomain | Default cluster domain of the Kubernetes cluster | "cluster.local" |
| etcd.image.name | Image name used by KubeAdmiral etcd | "registry.k8s.io/etcd:3.4.13-0" |
| etcd.image.pullPolicy | Pull policy of the etcd image | "IfNotPresent" |
| etcd.certHosts | Hosts accessible with the etcd certificate | ["kubernetes.default.svc", ".etcd.{{ .Release.Namespace }}.svc.{{ .Values.clusterDomain }}", ".{{ .Release.Namespace }}.svc.{{ .Values.clusterDomain }}", ".{{ .Release.Namespace }}.svc", "localhost", "127.0.0.1"] |
| apiServer.image.name | Image name of kubeadmiral-apiserver | "registry.k8s.io/kube-apiserver:v1.20.15" |
| apiServer.image.pullPolicy | Pull policy of the kubeadmiral-apiserver image | "IfNotPresent" |
| apiServer.certHosts | Hosts supported by the kubeadmiral-apiserver certificate | ["kubernetes.default.svc", ".etcd.{{ .Release.Namespace }}.svc.{{ .Values.clusterDomain }}", ".{{ .Release.Namespace }}.svc.{{ .Values.clusterDomain }}", ".{{ .Release.Namespace }}.svc", "localhost", "127.0.0.1", "{{ .Values.apiServer.externalIP }}"] |
| apiServer.hostNetwork | Deploy kubeadmiral-apiserver with hostNetwork. If there are multiple KubeAdmiral instances in one cluster, it is recommended to set this to "false" | "false" |
| apiServer.serviceType | Service type of kubeadmiral-apiserver | "ClusterIP" |
| apiServer.externalIP | Exposed IP of kubeadmiral-apiserver. To expose the apiserver externally, set this field; the external IP is then written into the certificate and a kubeconfig that uses the external IP is generated. | "" |
| apiServer.nodePort | Node port used for kubeadmiral-apiserver. Takes effect only when apiServer.serviceType is set to "NodePort". If no port is specified, a node port is assigned automatically. | 0 |
| kubeControllerManager.image.name | Image name of kube-controller-manager | "registry.k8s.io/kube-controller-manager:v1.20.15" |
| kubeControllerManager.image.pullPolicy | Pull policy of the kube-controller-manager image | "IfNotPresent" |
| kubeControllerManager.controllers | Controllers that the kube-controller-manager component starts | "namespace,garbagecollector" |
| kubeadmiralControllerManager.image.name | Image name of kubeadmiral-controller-manager | "docker.io/kubewharf/kubeadmiral-controller-manager:v1.0.0" |
| kubeadmiralControllerManager.image.pullPolicy | Pull policy of the kubeadmiral-controller-manager image | "IfNotPresent" |
| kubeadmiralControllerManager.extraCommandArgs | Additional startup parameters of kubeadmiral-controller-manager | {} |
| kubeadmiralHpaAggregator.image.name | Image name of kubeadmiral-hpa-aggregator | "docker.io/kubewharf/kubeadmiral-hpa-aggregator:v1.0.0" |
| kubeadmiralHpaAggregator.image.pullPolicy | Pull policy of the kubeadmiral-hpa-aggregator image | "IfNotPresent" |
| kubeadmiralHpaAggregator.extraCommandArgs | Additional startup parameters of kubeadmiral-hpa-aggregator | {} |
| installTools.cfssl.image.name | cfssl image name for the KubeAdmiral installer | "docker.io/cfssl/cfssl:latest" |
| installTools.cfssl.image.pullPolicy | cfssl image pull policy | "IfNotPresent" |
| installTools.kubectl.image.name | kubectl image name for the KubeAdmiral installer | "docker.io/bitnami/kubectl:1.22.10" |
| installTools.kubectl.image.pullPolicy | kubectl image pull policy | "IfNotPresent" |

2.2 - Quick start

Prerequisites

- kubectl v1.20.15+

- KubeAdmiral cluster

Propagating deployment resources with KubeAdmiral

The most common use case for KubeAdmiral is to manage Kubernetes resources across multiple clusters with a single unified API. This section shows you how to propagate Deployments to multiple member clusters and view their respective statuses using KubeAdmiral.

1. Make sure you are using the kubeconfig of the KubeAdmiral control plane.

$ export KUBECONFIG=$HOME/.kube/kubeadmiral/kubeadmiral.config

2. Create a Deployment object in KubeAdmiral

$ kubectl create -f - <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: echo-server
  labels:
    app: echo-server
spec:
  replicas: 6
  selector:
    matchLabels:
      app: echo-server
  template:
    metadata:
      labels:
        app: echo-server
    spec:
      containers:
      - name: server
        image: ealen/echo-server:latest
        ports:
        - containerPort: 8080
          protocol: TCP
          name: echo-server
EOF

3. Create a new PropagationPolicy in KubeAdmiral

The following propagation policy propagates bound resources to all member clusters, because an empty clusterSelector matches all clusters:

$ kubectl create -f - <<EOF
apiVersion: core.kubeadmiral.io/v1alpha1
kind: PropagationPolicy
metadata:
  name: policy-all-clusters
spec:
  schedulingMode: Divide
  clusterSelector: {}
EOF

4. Bind the created Deployment object to the PropagationPolicy

Bind the PropagationPolicy by adding a label to the Deployment object:

$ kubectl label deployment echo-server kubeadmiral.io/propagation-policy-name=policy-all-clusters

5. Wait for the Deployment object to be propagated to all member clusters

If the KubeAdmiral control plane is working properly, the Deployment object is quickly propagated to all member clusters. You can get a quick overview of the propagation by checking the number of ready replicas of the Deployment in the control plane:

$ kubectl get deploy echo-server
NAME          READY   UP-TO-DATE   AVAILABLE   AGE
echo-server   6/6     6            6           10m

Meanwhile, we can also view the specific status of the propagated resources in each member cluster through the CollectedStatus object:

$ kubectl get collectedstatuses echo-server-deployments.apps -oyaml
apiVersion: core.kubeadmiral.io/v1alpha1
kind: CollectedStatus
metadata:
  name: echo-server-deployments.apps
  namespace: default
clusterStatus:
- clusterName: kubeadmiral-member-1
  collectedFields:
    metadata:
      creationTimestamp: "2023-03-14T08:02:05Z"
    spec:
      replicas: 2
    status:
      availableReplicas: 2
      conditions:
      - lastTransitionTime: "2023-03-14T08:02:10Z"
        lastUpdateTime: "2023-03-14T08:02:10Z"
        message: Deployment has minimum availability.
        reason: MinimumReplicasAvailable
        status: "True"
        type: Available
      - lastTransitionTime: "2023-03-14T08:02:05Z"
        lastUpdateTime: "2023-03-14T08:02:10Z"
        message: ReplicaSet "echo-server-65dcc57996" has successfully progressed.
        reason: NewReplicaSetAvailable
        status: "True"
        type: Progressing
      observedGeneration: 1
      readyReplicas: 2
      replicas: 2
      updatedReplicas: 2
- clusterName: kubeadmiral-member-2
  collectedFields:
    metadata:
      creationTimestamp: "2023-03-14T08:02:05Z"
    spec:
      replicas: 2
    status:
      availableReplicas: 2
      conditions:
      - lastTransitionTime: "2023-03-14T08:02:09Z"
        lastUpdateTime: "2023-03-14T08:02:09Z"
        message: Deployment has minimum availability.
        reason: MinimumReplicasAvailable
        status: "True"
        type: Available
      - lastTransitionTime: "2023-03-14T08:02:05Z"
        lastUpdateTime: "2023-03-14T08:02:09Z"
        message: ReplicaSet "echo-server-65dcc57996" has successfully progressed.
        reason: NewReplicaSetAvailable
        status: "True"
        type: Progressing
      observedGeneration: 1
      readyReplicas: 2
      replicas: 2
      updatedReplicas: 2
- clusterName: kubeadmiral-member-3
  collectedFields:
    metadata:
      creationTimestamp: "2023-03-14T08:02:05Z"
    spec:
      replicas: 2
    status:
      availableReplicas: 2
      conditions:
      - lastTransitionTime: "2023-03-14T08:02:13Z"
        lastUpdateTime: "2023-03-14T08:02:13Z"
        message: Deployment has minimum availability.
        reason: MinimumReplicasAvailable
        status: "True"
        type: Available
      - lastTransitionTime: "2023-03-14T08:02:05Z"
        lastUpdateTime: "2023-03-14T08:02:13Z"
        message: ReplicaSet "echo-server-65dcc57996" has successfully progressed.
        reason: NewReplicaSetAvailable
        status: "True"
        type: Progressing
      observedGeneration: 1
      readyReplicas: 2
      replicas: 2
      updatedReplicas: 2
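For a compact per-cluster view, the CollectedStatus object can also be read as JSON (`-o json`) and summarized. The following Python sketch relies only on the `clusterStatus[].collectedFields` layout shown above; the function names are hypothetical, and `fetch` assumes `kubectl` is on the PATH with KUBECONFIG pointing at the KubeAdmiral control plane.

```python
import json
import subprocess


def summarize(collected_status: dict) -> dict[str, tuple[int, int]]:
    """Map each member cluster to (readyReplicas, spec.replicas) from a CollectedStatus."""
    summary = {}
    for entry in collected_status.get("clusterStatus", []):
        fields = entry.get("collectedFields", {})
        desired = fields.get("spec", {}).get("replicas", 0)
        ready = fields.get("status", {}).get("readyReplicas", 0)  # absent until pods are ready
        summary[entry["clusterName"]] = (ready, desired)
    return summary


def fetch(name: str, namespace: str = "default") -> dict:
    """Read a CollectedStatus object as JSON via kubectl."""
    out = subprocess.run(
        ["kubectl", "get", "collectedstatuses", name, "-n", namespace, "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return json.loads(out)


# Usage against a live control plane:
#   for cluster, (ready, desired) in summarize(fetch("echo-server-deployments.apps")).items():
#       print(f"{cluster}: {ready}/{desired} ready")
```

For the Deployment above, each of the three member clusters would report 2 of 2 replicas ready, which adds up to the 6/6 shown by `kubectl get deploy`.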

3 - User Guide

3.1 - Member clusters management

Prerequisites

- kubectl v1.20.15+

- KubeAdmiral cluster

- Client cert, client key and CA information of the new member cluster

Associate member clusters

1. Use the kubeconfig of the KubeAdmiral cluster

Replace KUBEADMIRAL_CLUSTER_KUBECONFIG with the path of the kubeconfig used to connect to the KubeAdmiral cluster:

$ export KUBECONFIG=KUBEADMIRAL_CLUSTER_KUBECONFIG

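2. Encode the client certificate, client key, and CA of the member cluster

Replace NEW_CLUSTER_CA, NEW_CLUSTER_CERT, and NEW_CLUSTER_KEY with the paths of the CA (certificate authority) file, client certificate file, and client key file of the new member cluster, respectively (you can obtain them from the kubeconfig of the member cluster). The -w 0 option of GNU coreutils base64 disables line wrapping, so each encoded value fits on a single line in the Secret. If the kubeconfig already contains the certificate-authority-data, client-certificate-data, and client-key-data fields, those values are already base64-encoded and can be used as-is.

$ export ca_data=$(base64 -w 0 NEW_CLUSTER_CA)

$ export cert_data=$(base64 -w 0 NEW_CLUSTER_CERT)

$ export key_data=$(base64 -w 0 NEW_CLUSTER_KEY)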

3. Create a Secret in KubeAdmiral to store the connection information of the member cluster

Replace CLUSTER_NAME with the name of the new member cluster:

$ kubectl apply -f - << EOF
apiVersion: v1
kind: Secret
metadata:
  name: CLUSTER_NAME
  namespace: kube-admiral-system
data:
  certificate-authority-data: $ca_data
  client-certificate-data: $cert_data
  client-key-data: $key_data
EOF

4. Create a FederatedCluster object for the member cluster in KubeAdmiral

Replace CLUSTER_NAME and CLUSTER_ENDPOINT with the name and API server address of the member cluster:

$ kubectl apply -f - << EOF
apiVersion: core.kubeadmiral.io/v1alpha1
kind: FederatedCluster
metadata:
  name: CLUSTER_NAME
spec:
  apiEndpoint: CLUSTER_ENDPOINT
  secretRef:
    name: CLUSTER_NAME
  useServiceAccount: true
EOF
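When many member clusters need to be associated, generating the Secret and FederatedCluster manifests from steps 3 and 4 in code avoids shell quoting and base64 line-wrapping pitfalls. The following Python sketch is a hypothetical helper that mirrors the two manifests above field for field and wraps them in a `v1` `List`; the `kube-admiral-system` namespace default is copied from the Secret above, and the PEM contents are passed in as bytes.

```python
import base64


def b64(data: bytes) -> str:
    """Base64-encode bytes on a single line, as Secret data values require."""
    return base64.b64encode(data).decode("ascii")


def build_cluster_manifests(name: str, endpoint: str, ca_pem: bytes, cert_pem: bytes,
                            key_pem: bytes, namespace: str = "kube-admiral-system") -> dict:
    """Return a v1 List holding the Secret and FederatedCluster for one member cluster."""
    secret = {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "data": {
            "certificate-authority-data": b64(ca_pem),
            "client-certificate-data": b64(cert_pem),
            "client-key-data": b64(key_pem),
        },
    }
    cluster = {
        "apiVersion": "core.kubeadmiral.io/v1alpha1",
        "kind": "FederatedCluster",
        "metadata": {"name": name},
        "spec": {
            "apiEndpoint": endpoint,
            "secretRef": {"name": name},  # must match the Secret name above
            "useServiceAccount": True,
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [secret, cluster]}
```

For example, read the three PEM files with `pathlib.Path(...).read_bytes()`, write `json.dumps(build_cluster_manifests(...))` to a file, and apply it with `kubectl apply -f <file>`; kubectl accepts JSON manifests as well as YAML.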

5. View the member cluster status

If both the KubeAdmiral control plane and the member cluster are working properly, the association process should complete quickly and you should see the following:

$ kubectl get federatedclusters
NAME           READY   JOINED   AGE
...
CLUSTER_NAME   True    True     1m
...

Note: For a successfully associated member cluster, both READY and JOINED should be True.

Dissociate member clusters

1. Delete the FederatedCluster object corresponding to the member cluster in KubeAdmiral

Replace CLUSTER_NAME with the name of the member cluster to be deleted.

$ kubectl delete federatedcluster CLUSTER_NAME

3.2 - Propagation Policy

Overview

KubeAdmiral defines the propagation policies of multi-cluster applications in the federated cluster through PropagationPolicy/ClusterPropagationPolicy. Multiple replicas of an application can be deployed to the specified member clusters according to the propagation policy. When a member cluster fails, the replicas can be flexibly scheduled to other clusters to ensure high availability of the business.

Currently supported scheduling modes are Duplicate and Divide. The Divide scheduling mode is further divided into dynamic weight and static weight.

Policy type

Propagation policies can be divided into two categories according to the effective scope.

- Namespace-scoped (PropagationPolicy): The policy takes effect within the specified namespace.

- Cluster-scoped (ClusterPropagationPolicy): The policy takes effect in all namespaces within the cluster.

Target cluster selection

PropagationPolicy provides multiple semantics to help users select the appropriate target cluster, including placement, clusterSelector, clusterAffinity, tolerations, and maxClusters.

Placement

Users can configure Placement so that the propagation policy takes effect only in the specified member clusters, and resources are scheduled only to those member clusters.

A typical scenario is selecting multiple member clusters as deployment clusters to meet high-availability requirements. Placement also provides the preferences parameter, which configures the weight and the number of replicas for each selected cluster and is suitable for multi-cluster propagation scenarios.

- cluster: The cluster specified in the resource propagation, selected from the existing member clusters.

- weight: The relative weight used in static weight propagation, ranging from 1 to 100. The larger the number, the higher the relative weight; the effective share of each cluster is its weight relative to the weights of all selected clusters. For example, if two selected clusters both have a weight of 1 (or both 100), each receives 50%.

- minReplicas: The minimum number of replicas of the current cluster.

- maxReplicas: The maximum number of replicas of the current cluster.

placement:
- cluster: member1
- cluster: member2

The propagation policy configured above propagates resources to two clusters, member1 and member2. Advanced usage of placement is described in the following sections.

ClusterSelector

Users can use cluster labels to match clusters. The propagation policy takes effect in member clusters that match the clusterSelector labels, and resources are scheduled to those member clusters.

If multiple labels are configured, the following rules apply:

- If multiple clusterSelector labels are configured, a member cluster must match all of them.

- If both clusterSelector and clusterAffinity are used, the result is the intersection of the clusters matched by each.

- If both clusterSelector and clusterAffinity are empty, all clusters are matched.

clusterSelector:
  region: beijing
  az: zone1

The propagation policy configured above propagates resources to clusters that have both labels "region: beijing" and "az: zone1".

ClusterAffinity

ClusterAffinity uses label selector expressions as mandatory scheduling conditions to match clusters. The propagation policy takes effect in member clusters that match clusterAffinity, and resources are scheduled only to those member clusters.

If multiple labels are configured, the following rules apply:

- If clusterAffinity is used, a member cluster only needs to satisfy any one of the configured conditions, but all labels within that condition must match.

- If both clusterSelector and clusterAffinity are used, the result is the intersection of the clusters matched by each.

- If both clusterSelector and clusterAffinity are empty, all clusters are matched.

clusterAffinity:
  matchExpressions:
  - key: region
    operator: In
    values:
    - beijing
  - key: provider
    operator: In
    values:
    - volcengine

The propagation policy configured above propagates resources to clusters that have both labels "region: beijing" and "provider: volcengine".
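The matching rules for clusterSelector and clusterAffinity can be sketched in Python as follows. This is an illustration of the rules listed above, not KubeAdmiral's scheduler code. It assumes clusterAffinity is modeled as a list of conditions (terms), each holding matchExpressions that must all match, with terms ORed together; operators other than `In` are assumed to follow Kubernetes label-selector semantics. The cluster names and labels are hypothetical.

```python
from typing import Optional


def matches_selector(labels: dict[str, str], selector: dict[str, str]) -> bool:
    """clusterSelector: the cluster must carry every key/value pair."""
    return all(labels.get(key) == value for key, value in selector.items())


def matches_expression(labels: dict[str, str], expr: dict) -> bool:
    """One matchExpressions entry, using Kubernetes label-selector operators."""
    key, op, values = expr["key"], expr["operator"], expr.get("values", [])
    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        return key not in labels or labels[key] not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"unsupported operator: {op}")


def matches_affinity(labels: dict[str, str], terms: list[dict]) -> bool:
    """clusterAffinity: any term may match; every expression in that term must match."""
    if not terms:
        return True  # no affinity configured: no restriction
    return any(
        # A term with no expressions matches nothing, as in Kubernetes node affinity.
        bool(term.get("matchExpressions"))
        and all(matches_expression(labels, e) for e in term["matchExpressions"])
        for term in terms
    )


def select_clusters(clusters: dict[str, dict[str, str]],
                    selector: Optional[dict[str, str]] = None,
                    affinity: Optional[list[dict]] = None) -> list[str]:
    """Intersect clusterSelector and clusterAffinity; both empty selects every cluster."""
    return [
        name for name, labels in clusters.items()
        if matches_selector(labels, selector or {}) and matches_affinity(labels, affinity or [])
    ]


clusters = {
    "member1": {"region": "beijing", "az": "zone1", "provider": "volcengine"},
    "member2": {"region": "beijing", "az": "zone2", "provider": "other-cloud"},
    "member3": {"region": "shanghai", "provider": "volcengine"},
}
doc_affinity = [{"matchExpressions": [
    {"key": "region", "operator": "In", "values": ["beijing"]},
    {"key": "provider", "operator": "In", "values": ["volcengine"]},
]}]
print(select_clusters(clusters, affinity=doc_affinity))  # ['member1']
```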

Tolerations

Tolerations allow resources to be scheduled to member clusters that carry matching taints. You can configure tolerations for one or more taints as needed, and the scheduler selects clusters accordingly.

tolerations:
- effect: NoSchedule
  key: dedicated
  operator: Equal
  value: groupName
- effect: NoExecute
  key: special
  operator: Exists

By default, the scheduler filters out clusters with NoSchedule or NoExecute taints. The propagation policy configured above tolerates specific taints, so clusters carrying those taints can still be selected.
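The following Python sketch shows how such a toleration check can work. It reuses the standard Kubernetes Pod toleration matching rules (an empty effect matches every effect, `Exists` ignores the value, an empty operator means `Equal`) and assumes KubeAdmiral applies equivalent rules to cluster taints; `tolerationSeconds` is ignored, and the function names are hypothetical.

```python
# Taint effects that make the scheduler filter a cluster out unless tolerated.
FILTERED_EFFECTS = {"NoSchedule", "NoExecute"}


def tolerates(toleration: dict, taint: dict) -> bool:
    """Return True if a single toleration matches a single taint."""
    effect = toleration.get("effect", "")
    if effect and effect != taint.get("effect"):
        return False  # an empty toleration effect matches all effects
    key = toleration.get("key", "")
    if key and key != taint.get("key"):
        return False  # an empty key (with Exists) matches all keys
    operator = toleration.get("operator") or "Equal"  # empty operator means Equal
    if operator == "Exists":
        return True
    if operator == "Equal":
        return toleration.get("value", "") == taint.get("value", "")
    return False


def cluster_is_schedulable(taints: list[dict], tolerations: list[dict]) -> bool:
    """A cluster stays a candidate if every NoSchedule/NoExecute taint is tolerated."""
    return all(
        any(tolerates(t, taint) for t in tolerations)
        for taint in taints
        if taint.get("effect") in FILTERED_EFFECTS
    )


# The tolerations from the policy above.
doc_tolerations = [
    {"effect": "NoSchedule", "key": "dedicated", "operator": "Equal", "value": "groupName"},
    {"effect": "NoExecute", "key": "special", "operator": "Exists"},
]
print(cluster_is_schedulable([{"key": "dedicated", "value": "groupName", "effect": "NoSchedule"}],
                             doc_tolerations))  # True
```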

MaxClusters

MaxClusters sets the upper limit on the number of member clusters to which resources can be scheduled. The value must be a positive integer. In a single-cluster propagation scenario, maxClusters can be set to 1. For example, for task scheduling, if maxClusters is set to 1, the task is scheduled to and executed in the member cluster with the best resources among the candidate member clusters.
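Conceptually, maxClusters truncates the scheduler's ranked list of candidate clusters. The Python sketch below assumes each feasible cluster already has a numeric score (a hypothetical stand-in for KubeAdmiral's actual scoring, which is not described here) and keeps the top maxClusters clusters.

```python
from typing import Optional


def pick_clusters(scores: dict[str, float], max_clusters: Optional[int] = None) -> list[str]:
    """Rank feasible clusters by score (highest first) and keep at most max_clusters."""
    if max_clusters is not None and max_clusters < 1:
        raise ValueError("maxClusters must be a positive integer")
    # Sort by descending score; break ties by name so the result is deterministic.
    ranked = sorted(scores, key=lambda name: (-scores[name], name))
    return ranked if max_clusters is None else ranked[:max_clusters]


scores = {"member1": 0.4, "member2": 0.9, "member3": 0.7}  # hypothetical scores
print(pick_clusters(scores, max_clusters=1))  # ['member2']
```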

Duplicated scheduling mode

Duplicate scheduling mode propagates the same number of replicas to each of multiple member clusters.

schedulingMode: Duplicate
placement:
- cluster: member1
- cluster: member2
  preferences:
    minReplicas: 3
    maxReplicas: 3
- cluster: member3
  preferences:
    minReplicas: 1
    maxReplicas: 3

The propagation policy configured above deploys resources to member1, member2, and member3. member1 uses the number of replicas defined in the resource template, member2 deploys 3 replicas, and member3 deploys between 1 and 3 replicas depending on the situation.
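One plausible reading of the example above is that each cluster receives the template's replica count, clamped into that cluster's [minReplicas, maxReplicas] range. The Python sketch below implements that reading; the clamping rule is an assumption used for illustration, not a statement of KubeAdmiral's exact behavior.

```python
def duplicate_replicas(template_replicas: int, placement: list[dict]) -> dict[str, int]:
    """Replicas per cluster in Duplicate mode, assuming clamping to each cluster's preferences."""
    result = {}
    for entry in placement:
        prefs = entry.get("preferences", {})
        replicas = template_replicas  # default: the count from the resource template
        if "minReplicas" in prefs:
            replicas = max(replicas, prefs["minReplicas"])
        if "maxReplicas" in prefs:
            replicas = min(replicas, prefs["maxReplicas"])
        result[entry["cluster"]] = replicas
    return result


# The placement from the policy above.
placement = [
    {"cluster": "member1"},
    {"cluster": "member2", "preferences": {"minReplicas": 3, "maxReplicas": 3}},
    {"cluster": "member3", "preferences": {"minReplicas": 1, "maxReplicas": 3}},
]
print(duplicate_replicas(2, placement))  # {'member1': 2, 'member2': 3, 'member3': 2}
```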

Divided scheduling mode - dynamic weight

Dynamic weight scheduling strategy means that, when scheduling resources, the controller calculates the currently available resources of each member cluster using a preset dynamic weight scheduling algorithm and dynamically propagates replicas to multiple member clusters according to the desired total number of replicas, automatically balancing resources across member clusters.

For example, suppose users need to propagate a resource (target: 5 replicas) by weight to member clusters Cluster A and Cluster B. KubeAdmiral propagates a different number of replicas to each member cluster according to its weight. With dynamic weights, the weights are calculated by the system, and the actual number of replicas propagated to each member cluster depends on the cluster's total resources and remaining resources.

schedulingMode: Divide
placement:
- cluster: member1
- cluster: member2

The propagation policy configured above propagates replicas to two clusters, member1 and member2, according to the dynamic weight scheduling strategy.
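The exact dynamic weight algorithm is not described here, so the following Python sketch only illustrates the idea under a stated assumption: each cluster's weight is the number of additional replicas its free CPU can hold (free millicores divided by the per-replica CPU request), and the total is split in proportion to those weights with leftover replicas going to the largest remainders. The resource figures and cluster names are hypothetical.

```python
def dynamic_divide(total: int, available_millicores: dict[str, int],
                   request_millicores: int) -> dict[str, int]:
    """Split `total` replicas in proportion to how many replicas each cluster can still fit."""
    if request_millicores <= 0:
        raise ValueError("the per-replica CPU request must be positive")
    # Assumed weight: how many more replicas of this size fit into the free CPU.
    capacity = {c: free // request_millicores for c, free in available_millicores.items()}
    cap_sum = sum(capacity.values())
    if cap_sum == 0:
        return {c: 0 for c in capacity}  # nothing fits anywhere
    # Integer floor of each proportional share, remembering the remainders.
    result = {c: total * cap // cap_sum for c, cap in capacity.items()}
    remainder = {c: total * cap % cap_sum for c, cap in capacity.items()}
    leftover = total - sum(result.values())
    # Hand the leftover replicas to the clusters with the largest remainders.
    for c in sorted(capacity, key=lambda c: (-remainder[c], c))[:leftover]:
        result[c] += 1
    return result


# Target 5 replicas; Cluster A can fit 8 more replicas, Cluster B only 2.
print(dynamic_divide(5, {"cluster-a": 4000, "cluster-b": 1000}, 500))  # {'cluster-a': 4, 'cluster-b': 1}
```

Because the weights are recomputed from current free resources, the same policy can yield a different split as clusters fill up, which is what distinguishes this mode from static weights.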

Divided scheduling mode - static weight

Static weight scheduling strategy means that the controller propagates replicas to multiple member clusters based on weights manually configured by the user. Static cluster weights range from 1 to 100, and the larger the number, the higher the relative weight. The effective share of each member cluster is its weight relative to the sum of the weights of all selected clusters.

For example, suppose users need to propagate a resource (target: 5 replicas) by weight to member clusters Cluster A and Cluster B. If static weight scheduling is selected and the weights are configured as Cluster A: 30 and Cluster B: 20 (a ratio of 3:2), Cluster A receives 3 replicas and Cluster B receives 2 replicas.

schedulingMode: Divide
placement:
- cluster: member1
  preferences:
    weight: 40
- cluster: member2
  preferences:
    weight: 60

The propagation policy configured above propagates replicas to two clusters, member1 and member2, according to the static weights (40:60) configured by the user. For example, if the resource object has 10 replicas, member1 receives 4 replicas and member2 receives 6 replicas.
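The arithmetic behind static weights can be sketched in Python as a proportional split. When the shares are not whole numbers, this sketch assigns the leftover replicas by the largest remainder method (ties broken by cluster name); that rounding rule is an assumption for illustration, and KubeAdmiral's own rounding may differ.

```python
def static_divide(total: int, weights: dict[str, int]) -> dict[str, int]:
    """Split `total` replicas by static weights using the largest remainder method."""
    if not weights:
        raise ValueError("at least one cluster is required")
    if any(not 1 <= w <= 100 for w in weights.values()):
        raise ValueError("static weights must be between 1 and 100")
    weight_sum = sum(weights.values())
    # Floor of each cluster's exact share total * w / weight_sum.
    result = {c: total * w // weight_sum for c, w in weights.items()}
    leftover = total - sum(result.values())
    # Give leftover replicas to the largest fractional remainders (ties: by name).
    order = sorted(weights, key=lambda c: (-(total * weights[c] % weight_sum), c))
    for c in order[:leftover]:
        result[c] += 1
    return result


print(static_divide(10, {"member1": 40, "member2": 60}))  # {'member1': 4, 'member2': 6}
```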

Rescheduling

KubeAdmiral allows users to configure rescheduling behavior in propagation policies. The rescheduling-related options are as follows:

DisableRescheduling: The master switch for rescheduling. When rescheduling is enabled (disableRescheduling: false), replica rescheduling is triggered according to the configured rescheduling conditions after resources are propagated to member clusters. When rescheduling is disabled (disableRescheduling: true), resource modifications, policy changes, and other events do not trigger rescheduling after resources are propagated to member clusters.

RescheduleWhen: Specifies the conditions that trigger rescheduling. When a condition occurs, resources are automatically rescheduled according to the latest policy configuration and cluster environment. Rescheduling on resource template changes is built into the system and cannot be turned off; however, only changes to the requests and replicas fields trigger rescheduling, while changes to other fields are only synchronized to the replicas already propagated to member clusters. In addition to resource template changes, KubeAdmiral provides the following optional trigger conditions.

- policyContentChanged: The scheduler triggers rescheduling when the scheduling semantics of the propagation policy change. The scheduling semantics do not include the policy's labels, annotations, or the autoMigration option. This trigger condition is enabled by default.

- clusterJoined: The scheduler triggers rescheduling when a new member cluster joins. This trigger condition is disabled by default.

- clusterLabelsChanged: The scheduler triggers rescheduling when the labels of a member cluster change. This trigger condition is disabled by default.

- clusterAPIResourcesChanged: The scheduler triggers rescheduling when the API resources of a member cluster change. This trigger condition is disabled by default.

ReplicaRescheduling: Controls how replicas are redistributed during rescheduling. Currently, only one option, avoidDisruption, is provided, and it is enabled by default: when replicas are reallocated because of rescheduling, the replicas that are already scheduled are not disrupted.

When users do not explicitly configure the rescheduling option, the default behavior is as follows:

reschedulePolicy:
  disableRescheduling: false
  rescheduleWhen:
    policyContentChanged: true
    clusterJoined: false
    clusterLabelsChanged: false
    clusterAPIResourcesChanged: false
  replicaRescheduling:
    avoidDisruption: true
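The trigger logic described above can be sketched in Python as follows. This is a simplified model under stated assumptions: the dataclasses, the event names (such as templateChanged), and the should_reschedule function are hypothetical and only mirror the documented fields and defaults. KubeAdmiral's scheduler evaluates these triggers internally and may take more details into account.

```python
from dataclasses import dataclass, field


# Hypothetical Python model of the reschedulePolicy fields; the defaults
# mirror the documented default behavior.
@dataclass
class RescheduleWhen:
    policy_content_changed: bool = True
    cluster_joined: bool = False
    cluster_labels_changed: bool = False
    cluster_api_resources_changed: bool = False


@dataclass
class ReschedulePolicy:
    disable_rescheduling: bool = False
    reschedule_when: RescheduleWhen = field(default_factory=RescheduleWhen)


# Template fields whose changes always trigger rescheduling, per the text above.
SCHEDULING_FIELDS = {"replicas", "requests"}


def should_reschedule(policy: ReschedulePolicy, event: str, changed_field: str = "") -> bool:
    """Return True if `event` would trigger rescheduling under `policy`."""
    if policy.disable_rescheduling:
        # Master switch off: nothing triggers rescheduling.
        return False
    if event == "templateChanged":
        # Always-on trigger, but only for scheduling-relevant fields;
        # other template changes are only synced to member clusters.
        return changed_field in SCHEDULING_FIELDS
    when = policy.reschedule_when
    optional_triggers = {
        "policyContentChanged": when.policy_content_changed,
        "clusterJoined": when.cluster_joined,
        "clusterLabelsChanged": when.cluster_labels_changed,
        "clusterAPIResourcesChanged": when.cluster_api_resources_changed,
    }
    return optional_triggers.get(event, False)


defaults = ReschedulePolicy()
print(should_reschedule(defaults, "templateChanged", "replicas"))  # True
print(should_reschedule(defaults, "templateChanged", "image"))     # False
print(should_reschedule(defaults, "clusterJoined"))                # False
```

With the defaults, a new member cluster joining does not move any replicas; setting cluster_joined=True in the model corresponds to setting clusterJoined: true in the policy.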

3.3 - Automatic propagation of associated resources

What are associated resources

Workloads in Kubernetes (such as Deployments and StatefulSets) usually depend on other resources, such as ConfigMaps, Secrets, and PVCs.

Therefore, when a workload is propagated to a target member cluster, its associated resources must be distributed to the same member cluster.

Workloads are associated with other resources in two main ways:

Built-in follower resources: resources that the workload references directly in its YAML configuration, such as the ConfigMaps, Secrets, and PVCs referenced by a Deployment or StatefulSet. If the workload and these resources are not distributed to the same cluster, the workload fails to deploy because the resources it references are missing.

Specified follower resources: mainly Services and Ingresses. Their absence does not cause the workload deployment to fail, but it does affect usage. For example, if a Service or Ingress is not distributed to the same member cluster as the workload, the workload cannot serve traffic externally.

Automatic propagation of associated resources

KubeAdmiral supports automatic propagation of associated resources: when a workload has associated resources, KubeAdmiral ensures that the workload and those resources are scheduled to the same member clusters.

We call the automatic propagation of associated resources follower scheduling.

Supported resource types for follower scheduling

Built-in follower resources: the associated resources are referenced directly in the workload's YAML configuration.

| Association Type | Workloads | Associated Resources |
|---|---|---|
| Built-in follower resources | Deployment | ConfigMap |
| | StatefulSet | Secret |
| | DaemonSet | PersistentVolumeClaim |
| | Job | ServiceAccount |
| | CronJob | |
| | Pod | |

Specified follower resources: the associated resources are declared with an annotation when the workload is created.

| Association Type | Workloads | Associated Resources |
|---|---|---|
| Specified follower resources | Deployment | ConfigMap |
| | StatefulSet | Secret |
| | DaemonSet | PersistentVolumeClaim |
| | Job | ServiceAccount |
| | CronJob | Service |
| | Pod | Ingress |

How to configure follower scheduling

Built-in follower resources

KubeAdmiral propagates built-in follower resources automatically; users do not need to add any configuration.

For example:

Deployment A mounts ConfigMap N, and Deployment A is configured to be propagated to Cluster1 and Cluster2.

ConfigMap N has no propagation policy of its own, but it follows Deployment A and is propagated to Cluster1 and Cluster2.
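To illustrate how built-in follower resources can be derived from a workload, the sketch below collects the ConfigMaps, Secrets, PersistentVolumeClaims, and ServiceAccount referenced by a pod template located at spec.template (as in Deployments, StatefulSets, DaemonSets, and Jobs). The helper built_in_followers is hypothetical and not exhaustive: the field names (volumes, envFrom, valueFrom, serviceAccountName) are standard Kubernetes pod spec fields, but KubeAdmiral's own follower logic may inspect a different set of fields.

```python
from typing import Any, Dict, Set, Tuple


def built_in_followers(workload: Dict[str, Any]) -> Set[Tuple[str, str]]:
    """Return (kind, name) pairs referenced by a workload's pod template.

    Hypothetical helper covering common reference fields only.
    """
    pod = workload.get("spec", {}).get("template", {}).get("spec", {})
    refs: Set[Tuple[str, str]] = set()
    # Volumes backed by ConfigMaps, Secrets, or PVCs.
    for vol in pod.get("volumes", []):
        if "configMap" in vol:
            refs.add(("ConfigMap", vol["configMap"]["name"]))
        if "secret" in vol:
            refs.add(("Secret", vol["secret"]["secretName"]))
        if "persistentVolumeClaim" in vol:
            refs.add(("PersistentVolumeClaim", vol["persistentVolumeClaim"]["claimName"]))
    # Environment variables sourced from ConfigMaps or Secrets.
    for container in pod.get("containers", []) + pod.get("initContainers", []):
        for source in container.get("envFrom", []):
            if "configMapRef" in source:
                refs.add(("ConfigMap", source["configMapRef"]["name"]))
            if "secretRef" in source:
                refs.add(("Secret", source["secretRef"]["name"]))
        for env in container.get("env", []):
            value_from = env.get("valueFrom", {})
            if "configMapKeyRef" in value_from:
                refs.add(("ConfigMap", value_from["configMapKeyRef"]["name"]))
            if "secretKeyRef" in value_from:
                refs.add(("Secret", value_from["secretKeyRef"]["name"]))
    # The ServiceAccount the pods run as.
    if pod.get("serviceAccountName"):
        refs.add(("ServiceAccount", pod["serviceAccountName"]))
    return refs


# Deployment A mounts ConfigMap N, as in the example above.
deployment_a = {
    "kind": "Deployment",
    "metadata": {"name": "deployment-a"},
    "spec": {"template": {"spec": {
        "containers": [{"name": "app", "image": "nginx"}],
        "volumes": [{"name": "config", "configMap": {"name": "configmap-n"}}],
    }}},
}
print(built_in_followers(deployment_a))  # {('ConfigMap', 'configmap-n')}
```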

Specified follower resources

When creating a workload, users can declare one or more associated resources with an annotation; these resources are then propagated to the target member clusters automatically along with the workload.

The format for specifying associated resources with the annotation is as follows:

- Annotation key: kubeadmiral.io/followers

- Each associated resource contains 3 fields: group, kind, and name, wrapped in {}.

- Multiple associated resources are separated by commas (,), and the whole list is wrapped in [].
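Because the annotation value is a JSON array, generating it with a JSON library avoids quoting mistakes. The sketch below builds and parses the kubeadmiral.io/followers value; the helper names followers_annotation and parse_followers are hypothetical, and only the annotation key and the group, kind, and name fields come from the format above.

```python
import json
from typing import Dict, List

FOLLOWERS_KEY = "kubeadmiral.io/followers"


def followers_annotation(followers: List[Dict[str, str]]) -> Dict[str, str]:
    """Build the followers annotation from entries with group, kind, and name."""
    entries = []
    for f in followers:
        missing = {"group", "kind", "name"} - set(f)
        if missing:
            raise ValueError(f"follower entry is missing fields: {sorted(missing)}")
        # Keep only the three documented fields, in a stable order.
        entries.append({"group": f["group"], "kind": f["kind"], "name": f["name"]})
    return {FOLLOWERS_KEY: json.dumps(entries)}


def parse_followers(annotations: Dict[str, str]) -> List[Dict[str, str]]:
    """Decode the annotation value back into a list of follower references."""
    raw = annotations.get(FOLLOWERS_KEY)
    return json.loads(raw) if raw else []


ann = followers_annotation([
    {"group": "", "kind": "Secret", "name": "secret-demo"},
    {"group": "networking.k8s.io", "kind": "Ingress", "name": "ingress-demo"},
])
print(ann[FOLLOWERS_KEY])
```

The printed value is the same string as the annotation used in the Deployment example in this section. Note that core resources such as Secret use an empty group, while Ingress uses networking.k8s.io.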

The annotation fields for each kind of associated resource are as follows:

| kind | group | name | Annotation |
|---|---|---|---|
| ConfigMap | "" | configmap-name | kubeadmiral.io/followers: '[{"group": "", "kind": "ConfigMap", "name": "configmap-name"}]' |
| Secret | "" | secret-name | kubeadmiral.io/followers: '[{"group": "", "kind": "Secret", "name": "secret-name"}]' |
| Service | "" | service-name | kubeadmiral.io/followers: '[{"group": "", "kind": "Service", "name": "service-name"}]' |
| PersistentVolumeClaim | "" | pvc-name | kubeadmiral.io/followers: '[{"group": "", "kind": "PersistentVolumeClaim", "name": "pvc-name"}]' |
| ServiceAccount | "" | serviceaccount-name | kubeadmiral.io/followers: '[{"group": "", "kind": "ServiceAccount", "name": "serviceaccount-name"}]' |
| Ingress | networking.k8s.io | ingress-name | kubeadmiral.io/followers: '[{"group": "networking.k8s.io", "kind": "Ingress", "name": "ingress-name"}]' |

In the following example, the Deployment is associated with two resources: a Secret and an Ingress.

apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    kubeadmiral.io/followers: '[{"group": "", "kind": "Secret", "name": "secret-demo"}, {"group": "networking.k8s.io", "kind": "Ingress", "name": "ingress-demo"}]'
  name: deployment-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: demo
  template:
    metadata:
      labels:
        app: demo
    spec:
      containers:
      - image: demo-repo:v1
        name: demo
        ports:
        - containerPort: 80

Disable follower scheduling for workloads

Follower scheduling is enabled by default in KubeAdmiral.

To disable follower scheduling, set the disableFollowerScheduling field of the PropagationPolicy to true. Here is an example:

apiVersion: core.kubeadmiral.io/v1alpha1
kind: PropagationPolicy
metadata:
  name: follow-demo
  namespace: default
spec:
  disableFollowerScheduling: true

Disable follower scheduling for associated resources

To exclude specific associated resources from follower scheduling, add the following annotation to those resources: kubeadmiral.io/disable-following: "true"

For example:

- Deployment A mounts ConfigMap N and Secret N, and the workload is configured to be propagated to Cluster1 and Cluster2.

- If the user does not want Secret N to follow the workload, adding the annotation kubeadmiral.io/disable-following: "true" to Secret N prevents Secret N from being propagated to Cluster1 and Cluster2 automatically.

- ConfigMap N still follows Deployment A and is distributed to Cluster1 and Cluster2.

The YAML is as follows:

apiVersion: v1
kind: Secret
metadata:
  annotations:
    kubeadmiral.io/disable-following: "true"
  name: follow-demo
  namespace: default
data: {}
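The interplay of the two switches in this section can be sketched as follows. The function followers_to_propagate and its inputs are hypothetical simplifications: the sketch assumes the follower list has already been resolved for one workload, and it ignores details such as a follower shared by several workloads.

```python
from typing import Dict, List

DISABLE_FOLLOWING = "kubeadmiral.io/disable-following"


def followers_to_propagate(disable_follower_scheduling: bool,
                           followers: List[dict],
                           workload_clusters: List[str]) -> Dict[str, List[str]]:
    """Map each follower ("Kind/name") to the clusters it would be sent to."""
    if disable_follower_scheduling:
        # The workload's PropagationPolicy turned follower scheduling off.
        return {}
    plan: Dict[str, List[str]] = {}
    for obj in followers:
        annotations = obj.get("metadata", {}).get("annotations") or {}
        if annotations.get(DISABLE_FOLLOWING) == "true":
            # This resource opted out of following its workloads.
            continue
        plan[f'{obj["kind"]}/{obj["metadata"]["name"]}'] = list(workload_clusters)
    return plan


configmap_n = {"kind": "ConfigMap", "metadata": {"name": "configmap-n"}}
secret_n = {"kind": "Secret",
            "metadata": {"name": "secret-n", "annotations": {DISABLE_FOLLOWING: "true"}}}
print(followers_to_propagate(False, [configmap_n, secret_n], ["Cluster1", "Cluster2"]))
# {'ConfigMap/configmap-n': ['Cluster1', 'Cluster2']}
```

This reproduces the example above: ConfigMap N follows Deployment A to both clusters, while Secret N stays behind.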

3.4 - Resource Federalization

What is Resource Federalization

Suppose a member cluster has already joined a host cluster and has resources deployed (such as Deployments) that are not managed by KubeAdmiral. Resource federalization lets you hand over the management of those resources directly to KubeAdmiral without restarting the pods of workload resources. The steps are described in the How to perform Resource Federalization section.

How to perform Resource Federalization

Before you begin

Refer to the Quickstart section for a quick launch of KubeAdmiral.

Create some resources in the member cluster

- Select the member cluster kubeadmiral-member-1.

$ export KUBECONFIG=$HOME/.kube/kubeadmiral/member-1.config

- Create the resource Deployment my-nginx.

$ kubectl apply -f ./my-nginx.yaml

# ./my-nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nginx
spec:
  selector:
    matchLabels:
      run: my-nginx
  replicas: 2
  template:
    metadata:
      labels:
        run: my-nginx
    spec:
      containers:
      - name: my-nginx
        image: nginx
        ports:
        - containerPort: 80

- Create the resource Service my-nginx.

$ kubectl apply -f ./my-nginx-svc.yaml

# ./my-nginx-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: my-nginx
  labels:
    run: my-nginx
spec:
  ports:
  - port: 80
    protocol: TCP
  selector:
    run: my-nginx

- View the created resources.

$ kubectl get pod,deploy,svc
NAME                            READY   STATUS    RESTARTS   AGE
pod/my-nginx-5b56ccd65f-l7dm5   1/1     Running   0          29s
pod/my-nginx-5b56ccd65f-ldfp4   1/1     Running   0          29s

NAME                       READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/my-nginx   2/2     2            2           29s

NAME               TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)   AGE
service/my-nginx   ClusterIP   10.96.72.40   <none>        80/TCP    25s

Create a PropagationPolicy for resource binding in the host cluster

- Select the host cluster.

$ export KUBECONFIG=$HOME/.kube/kubeadmiral/kubeadmiral.config

- Create the PropagationPolicy nginx-pp.

$ kubectl apply -f ./propagationPolicy.yaml

# ./propagationPolicy.yaml
apiVersion: core.kubeadmiral.io/v1alpha1
kind: PropagationPolicy
metadata:
  name: nginx-pp
  namespace: default
spec:
  placement:
  - cluster: kubeadmiral-member-1 # The member clusters participating in resource federalization are referred to as federated clusters.
    preferences:
      weight: 1
  replicaRescheduling:
    avoidDisruption: true
  reschedulePolicy:
    replicaRescheduling:
      avoidDisruption: true
    rescheduleWhen:
      clusterAPIResourcesChanged: false
      clusterJoined: false
      clusterLabelsChanged: false
      policyContentChanged: true
  schedulingMode: Duplicate
  schedulingProfile: ""
  stickyCluster: false

Create the same resource in the host cluster and associate it with the PropagationPolicy

- Select the member cluster kubeadmiral-member-1 and perform the following operations on it.

$ export KUBECONFIG=$HOME/.kube/kubeadmiral/member-1.config

- Retrieve and save the YAML of the Deployment resource in the member cluster.

$ kubectl get deploy my-nginx -oyaml
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "1"
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"name":"my-nginx","namespace":"default"},"spec":{"replicas":2,"selector":{"matchLabels":{"run":"my-nginx"}},"template":{"metadata":{"labels":{"run":"my-nginx"}},"spec":{"containers":[{"image":"nginx","name":"my-nginx","ports":[{"containerPort":80}]}]}}}}
  creationTimestamp: "2023-08-30T02:26:57Z"
  generation: 1
  name: my-nginx
  namespace: default
  resourceVersion: "898"
  uid: 5b64f73b-ce6d-4ada-998e-db6f682155f6
spec:
  progressDeadlineSeconds: 600
  replicas: 2
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      run: my-nginx
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        run: my-nginx
    spec:
      containers:
      - image: nginx
        imagePullPolicy: Always
        name: my-nginx
        ports:
        - containerPort: 80
          protocol: TCP
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
status:
  availableReplicas: 2
  conditions:
  - lastTransitionTime: "2023-08-30T02:27:21Z"
    lastUpdateTime: "2023-08-30T02:27:21Z"
    message: Deployment has minimum availability.
    reason: MinimumReplicasAvailable
    status: "True"
    type: Available
  - lastTransitionTime: "2023-08-30T02:26:57Z"
    lastUpdateTime: "2023-08-30T02:27:21Z"
    message: ReplicaSet "my-nginx-5b56ccd65f" has successfully progressed.
    reason: NewReplicaSetAvailable
    status: "True"
    type: Progressing
  observedGeneration: 1
  readyReplicas: 2
  replicas: 2
  updatedReplicas: 2

- Retrieve and save the YAML of the Service resource in the member cluster.

$ kubectl get svc my-nginx -oyaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"run":"my-nginx"},"name":"my-nginx","namespace":"default"},"spec":{"ports":[{"port":80,"protocol":"TCP"}],"selector":{"run":"my-nginx"}}}
  creationTimestamp: "2023-08-30T02:27:01Z"
  labels:
    run: my-nginx
  name: my-nginx
  namespace: default
  resourceVersion: "855"
  uid: cc06cd52-1a80-4d3c-8fcf-e416d8c3027d
spec:
  clusterIP: 10.96.72.40
  clusterIPs:
  - 10.96.72.40
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    run: my-nginx
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}

- Merge the resource YAML and pre-process it for federation. (You can refer to the comments in resources.yaml.)

a. Remove the resourceVersion field from the resources.

b. For Service resources, remove the clusterIP and clusterIPs fields.

c. Add a label for the PropagationPolicy.

d. Add an annotation for resource takeover.

# ./resources.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    kubeadmiral.io/propagation-policy-name: nginx-pp # Add a label for the PropagationPolicy.
  annotations:
    kubeadmiral.io/conflict-resolution: adopt # Add an annotation for resource takeover.
    deployment.kubernetes.io/revision: "1"
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"name":"my-nginx","namespace":"default"},"spec":{"replicas":2,"selector":{"matchLabels":{"run":"my-nginx"}},"template":{"metadata":{"labels":{"run":"my-nginx"}},"spec":{"containers":[{"image":"nginx","name":"my-nginx","ports":[{"containerPort":80}]}]}}}}
  creationTimestamp: "2023-08-30T02:26:57Z"
  generation: 1
  name: my-nginx
  namespace: default
  # resourceVersion: "898"   (removed)
  uid: 5b64f73b-ce6d-4ada-998e-db6f682155f6
spec:
  progressDeadlineSeconds: 600
  replicas: 2
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      run: my-nginx
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        run: my-nginx
    spec:
      containers:
      - image: nginx
        imagePullPolicy: Always
        name: my-nginx
        ports:
        - containerPort: 80
          protocol: TCP
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
status:
  availableReplicas: 2
  conditions:
  - lastTransitionTime: "2023-08-30T02:27:21Z"
    lastUpdateTime: "2023-08-30T02:27:21Z"
    message: Deployment has minimum availability.
    reason: MinimumReplicasAvailable
    status: "True"
    type: Available
  - lastTransitionTime: "2023-08-30T02:26:57Z"
    lastUpdateTime: "2023-08-30T02:27:21Z"
    message: ReplicaSet "my-nginx-5b56ccd65f" has successfully progressed.
    reason: NewReplicaSetAvailable
    status: "True"
    type: Progressing
  observedGeneration: 1
  readyReplicas: 2
  replicas: 2
  updatedReplicas: 2
---
apiVersion: v1
kind: Service
metadata:
  annotations:
    kubeadmiral.io/conflict-resolution: adopt # Add an annotation for resource takeover.
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"run":"my-nginx"},"name":"my-nginx","namespace":"default"},"spec":{"ports":[{"port":80,"protocol":"TCP"}],"selector":{"run":"my-nginx"}}}
  creationTimestamp: "2023-08-30T02:27:01Z"
  labels:
    run: my-nginx
    kubeadmiral.io/propagation-policy-name: nginx-pp # Add a label for the PropagationPolicy.
  name: my-nginx
  namespace: default
  # resourceVersion: "855"   (removed)
  uid: cc06cd52-1a80-4d3c-8fcf-e416d8c3027d
spec:
  # Remove the cluster IP addresses: the network segment of the host cluster may conflict with that of the member cluster.
  # clusterIP: 10.96.72.40
  # clusterIPs:
  # - 10.96.72.40
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    run: my-nginx
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}

- Select the host cluster.

$ export KUBECONFIG=$HOME/.kube/kubeadmiral/kubeadmiral.config

- Create the resources in the host cluster.

$ kubectl apply -f ./resources.yaml
deployment.apps/my-nginx created
service/my-nginx created
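If you federate many resources, steps a to d can be scripted. The sketch below applies them to one resource that has already been loaded into a Python dict (for example with a YAML library, which is left out to keep the example standard-library only). The function prepare_for_adoption is hypothetical; only the label key, the annotation key, and the adopt value come from the steps above. Like the resources.yaml in this walkthrough, it leaves the other server-populated fields (status, uid, creationTimestamp) in place.

```python
import copy
from typing import Any, Dict

POLICY_LABEL = "kubeadmiral.io/propagation-policy-name"
CONFLICT_ANNOTATION = "kubeadmiral.io/conflict-resolution"


def prepare_for_adoption(obj: Dict[str, Any], policy_name: str) -> Dict[str, Any]:
    """Apply pre-processing steps a to d to one exported resource (hypothetical helper)."""
    out = copy.deepcopy(obj)  # leave the caller's object untouched
    meta = out.setdefault("metadata", {})
    # a. Remove the member cluster's resourceVersion.
    meta.pop("resourceVersion", None)
    # b. Services: remove the cluster IPs, which may clash with the host cluster's range.
    if out.get("kind") == "Service":
        spec = out.get("spec", {})
        spec.pop("clusterIP", None)
        spec.pop("clusterIPs", None)
    # c. Bind the resource to the PropagationPolicy via a label.
    labels = meta.get("labels") or {}
    labels[POLICY_LABEL] = policy_name
    meta["labels"] = labels
    # d. Ask KubeAdmiral to take over the existing member-cluster object.
    annotations = meta.get("annotations") or {}
    annotations[CONFLICT_ANNOTATION] = "adopt"
    meta["annotations"] = annotations
    return out


svc = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "my-nginx", "namespace": "default",
                 "labels": {"run": "my-nginx"}, "resourceVersion": "855"},
    "spec": {"clusterIP": "10.96.72.40", "clusterIPs": ["10.96.72.40"],
             "ports": [{"port": 80, "protocol": "TCP"}], "selector": {"run": "my-nginx"}},
}
prepared = prepare_for_adoption(svc, "nginx-pp")
print(prepared["metadata"]["labels"])
# {'run': 'my-nginx', 'kubeadmiral.io/propagation-policy-name': 'nginx-pp'}
```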

View the results of Resource Federalization

- Check the propagation status of the resources in the host cluster. Both resources have been successfully distributed to the member cluster.

$ kubectl get federatedobjects.core.kubeadmiral.io -oyaml
apiVersion: v1
items:
- apiVersion: core.kubeadmiral.io/v1alpha1
  kind: FederatedObject
  metadata:
    annotations:
      federate.controller.kubeadmiral.io/observed-annotations: kubeadmiral.io/conflict-resolution|deployment.kubernetes.io/revision,kubeadmiral.io/latest-replicaset-digests,kubectl.kubernetes.io/last-applied-configuration
      federate.controller.kubeadmiral.io/observed-labels: kubeadmiral.io/propagation-policy-name|
      federate.controller.kubeadmiral.io/template-generator-merge-patch: '{"metadata":{"annotations":{"kubeadmiral.io/conflict-resolution":null,"kubeadmiral.io/latest-replicaset-digests":null},"creationTimestamp":null,"finalizers":null,"labels":{"kubeadmiral.io/propagation-policy-name":null},"managedFields":null,"resourceVersion":null,"uid":null},"status":null}'
      internal.kubeadmiral.io/enable-follower-scheduling: "true"
      kubeadmiral.io/conflict-resolution: adopt
      kubeadmiral.io/pending-controllers: '[]'
      kubeadmiral.io/scheduling-triggers: '{"schedulingAnnotationsHash":"1450640401","replicaCount":2,"resourceRequest":{"millicpu":0,"memory":0,"ephemeralStorage":0,"scalarResources":null},"policyName":"nginx-pp","policyContentHash":"638791993","clusters":["kubeadmiral-member-2","kubeadmiral-member-3","kubeadmiral-member-1"],"clusterLabelsHashes":{"kubeadmiral-member-1":"2342744735","kubeadmiral-member-2":"3001383825","kubeadmiral-member-3":"2901236891"},"clusterTaintsHashes":{"kubeadmiral-member-1":"913756753","kubeadmiral-member-2":"913756753","kubeadmiral-member-3":"913756753"},"clusterAPIResourceTypesHashes":{"kubeadmiral-member-1":"2027866002","kubeadmiral-member-2":"2027866002","kubeadmiral-member-3":"2027866002"}}'
    creationTimestamp: "2023-08-30T06:48:42Z"
    finalizers:
    - kubeadmiral.io/sync-controller
    generation: 2
    labels:
      apps/v1: Deployment
      kubeadmiral.io/propagation-policy-name: nginx-pp
    name: my-nginx-deployments.apps
    namespace: default
    ownerReferences:
    - apiVersion: apps/v1
      blockOwnerDeletion: true
      controller: true
      kind: Deployment
      name: my-nginx
      uid: 8dd32323-b023-479a-8e60-b69a7dc1be28
    resourceVersion: "8045"
    uid: 444c83ec-2a3c-4366-b334-36ee9178df94
  spec:
    placements:
    - controller: kubeadmiral.io/global-scheduler
      placement:
      - cluster: kubeadmiral-member-1
    template:
      apiVersion: apps/v1
      kind: Deployment
      metadata:
        annotations:
          deployment.kubernetes.io/revision: "1"
          kubectl.kubernetes.io/last-applied-configuration: |
            {"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{"deployment.kubernetes.io/revision":"1","kubeadmiral.io/conflict-resolution":"adopt"},"creationTimestamp":"2023-08-30T02:26:57Z","generation":1,"labels":{"kubeadmiral.io/propagation-policy-name":"nginx-pp"},"name":"my-nginx","namespace":"default","uid":"5b64f73b-ce6d-4ada-998e-db6f682155f6"},"spec":{"progressDeadlineSeconds":600,"replicas":2,"revisionHistoryLimit":10,"selector":{"matchLabels":{"run":"my-nginx"}},"strategy":{"rollingUpdate":{"maxSurge":"25%","maxUnavailable":"25%"},"type":"RollingUpdate"},"template":{"metadata":{"creationTimestamp":null,"labels":{"run":"my-nginx"}},"spec":{"containers":[{"image":"nginx","imagePullPolicy":"Always","name":"my-nginx","ports":[{"containerPort":80,"protocol":"TCP"}],"resources":{},"terminationMessagePath":"/dev/termination-log","terminationMessagePolicy":"File"}],"dnsPolicy":"ClusterFirst","restartPolicy":"Always","schedulerName":"default-scheduler","securityContext":{},"terminationGracePeriodSeconds":30}}},"status":{"availableReplicas":2,"conditions":[{"lastTransitionTime":"2023-08-30T02:27:21Z","lastUpdateTime":"2023-08-30T02:27:21Z","message":"Deployment has minimum availability.","reason":"MinimumReplicasAvailable","status":"True","type":"Available"},{"lastTransitionTime":"2023-08-30T02:26:57Z","lastUpdateTime":"2023-08-30T02:27:21Z","message":"ReplicaSet \"my-nginx-5b56ccd65f\" has successfully progressed.","reason":"NewReplicaSetAvailable","status":"True","type":"Progressing"}],"observedGeneration":1,"readyReplicas":2,"replicas":2,"updatedReplicas":2}}
        generation: 1
        labels: {}
        name: my-nginx
        namespace: default
      spec:
        progressDeadlineSeconds: 600
        replicas: 2
        revisionHistoryLimit: 10
        selector:
          matchLabels:
            run: my-nginx
        strategy:
          rollingUpdate:
            maxSurge: 25%
            maxUnavailable: 25%
          type: RollingUpdate
        template:
          metadata:
            creationTimestamp: null
            labels:
              run: my-nginx
          spec:
            containers:
            - image: nginx
              imagePullPolicy: Always
              name: my-nginx
              ports:
              - containerPort: 80
                protocol: TCP
              resources: {}
              terminationMessagePath: /dev/termination-log
              terminationMessagePolicy: File
            dnsPolicy: ClusterFirst
            restartPolicy: Always
            schedulerName: default-scheduler
            securityContext: {}
            terminationGracePeriodSeconds: 30
  status:
    clusters:
    - cluster: kubeadmiral-member-1
      lastObservedGeneration: 2
      status: OK
    conditions:
    - lastTransitionTime: "2023-08-30T06:48:42Z"
      lastUpdateTime: "2023-08-30T06:48:42Z"
      status: "True"
      type: Propagated
    syncedGeneration: 2
- apiVersion: core.kubeadmiral.io/v1alpha1
  kind: FederatedObject
  metadata:
    annotations:
      federate.controller.kubeadmiral.io/observed-annotations: kubeadmiral.io/conflict-resolution|kubectl.kubernetes.io/last-applied-configuration
      federate.controller.kubeadmiral.io/observed-labels: kubeadmiral.io/propagation-policy-name|run
      federate.controller.kubeadmiral.io/template-generator-merge-patch: '{"metadata":{"annotations":{"kubeadmiral.io/conflict-resolution":null},"creationTimestamp":null,"finalizers":null,"labels":{"kubeadmiral.io/propagation-policy-name":null},"managedFields":null,"resourceVersion":null,"uid":null},"status":null}'
      internal.kubeadmiral.io/enable-follower-scheduling: "true"
      kubeadmiral.io/conflict-resolution: adopt
      kubeadmiral.io/pending-controllers: '[]'
      kubeadmiral.io/scheduling-triggers: '{"schedulingAnnotationsHash":"1450640401","replicaCount":0,"resourceRequest":{"millicpu":0,"memory":0,"ephemeralStorage":0,"scalarResources":null},"policyName":"nginx-pp","policyContentHash":"638791993","clusters":["kubeadmiral-member-1","kubeadmiral-member-2","kubeadmiral-member-3"],"clusterLabelsHashes":{"kubeadmiral-member-1":"2342744735","kubeadmiral-member-2":"3001383825","kubeadmiral-member-3":"2901236891"},"clusterTaintsHashes":{"kubeadmiral-member-1":"913756753","kubeadmiral-member-2":"913756753","kubeadmiral-member-3":"913756753"},"clusterAPIResourceTypesHashes":{"kubeadmiral-member-1":"2027866002","kubeadmiral-member-2":"2027866002","kubeadmiral-member-3":"2027866002"}}'
    creationTimestamp: "2023-08-30T06:48:42Z"
    finalizers:
    - kubeadmiral.io/sync-controller
    generation: 2
    labels:
      kubeadmiral.io/propagation-policy-name: nginx-pp
      v1: Service
    name: my-nginx-services
    namespace: default
    ownerReferences:
    - apiVersion: v1
      blockOwnerDeletion: true
      controller: true
      kind: Service
      name: my-nginx
      uid: 6a2a63a2-be82-464b-86b6-0ac4e6c3b69f
    resourceVersion: "8031"
    uid: 7c077821-3c7d-4e3b-8523-5b6f2b166e68
  spec:
    placements:
    - controller: kubeadmiral.io/global-scheduler
      placement:
      - cluster: kubeadmiral-member-1
    template:
      apiVersion: v1
      kind: Service
      metadata:
        annotations:
          kubectl.kubernetes.io/last-applied-configuration: |
            {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{"kubeadmiral.io/conflict-resolution":"adopt"},"creationTimestamp":"2023-08-30T02:27:01Z","labels":{"kubeadmiral.io/propagation-policy-name":"nginx-pp","run":"my-nginx"},"name":"my-nginx","namespace":"default","uid":"cc06cd52-1a80-4d3c-8fcf-e416d8c3027d"},"spec":{"ipFamilies":["IPv4"],"ipFamilyPolicy":"SingleStack","ports":[{"port":80,"protocol":"TCP","targetPort":80}],"selector":{"run":"my-nginx"},"sessionAffinity":"None","type":"ClusterIP"},"status":{"loadBalancer":{}}}
        labels:
          run: my-nginx
        name: my-nginx
        namespace: default
      spec:
        clusterIP: 10.106.114.20
        clusterIPs:
        - 10.106.114.20
        ports:
        - port: 80
          protocol: TCP
          targetPort: 80
        selector:
          run: my-nginx
        sessionAffinity: None
        type: ClusterIP
  status:
    clusters:
    - cluster: kubeadmiral-member-1
      status: OK
    conditions:
    - lastTransitionTime: "2023-08-30T06:48:42Z"
      lastUpdateTime: "2023-08-30T06:48:42Z"
      status: "True"
      type: Propagated
    syncedGeneration: 2
kind: List
metadata:
  resourceVersion: ""

- Select the member cluster kubeadmiral-member-1.

$ export KUBECONFIG=$HOME/.kube/kubeadmiral/member-1.config

- View the pods in the member cluster. They have not been restarted: the pod names are unchanged and RESTARTS is still 0.

$ kubectl get po
NAME                        READY   STATUS    RESTARTS   AGE
my-nginx-5b56ccd65f-l7dm5   1/1     Running   0          4h49m
my-nginx-5b56ccd65f-ldfp4   1/1     Running   0          4h49m
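To confirm programmatically that the takeover did not restart any pods, you can compare the pod listings taken before and after federalization. The sketch below is a hypothetical helper that parses the plain-text output of kubectl get po. It assumes the default column layout shown above, expects only the pod table (not the deployment or service tables), and strips the pod/ prefix that kubectl adds when several resource types are listed together.

```python
from typing import Dict


def parse_pods(kubectl_output: str) -> Dict[str, int]:
    """Map pod name -> RESTARTS count from plain `kubectl get po` table output."""
    lines = [line for line in kubectl_output.strip().splitlines() if line.strip()]
    header = lines[0].split()
    name_col, restarts_col = header.index("NAME"), header.index("RESTARTS")
    pods: Dict[str, int] = {}
    for line in lines[1:]:
        cols = line.split()
        name = cols[name_col].split("/")[-1]  # drop a "pod/" prefix if present
        pods[name] = int(cols[restarts_col])
    return pods


def pods_untouched(before: str, after: str) -> bool:
    """True if the same pods exist with the same restart counts."""
    # Same names: the pods were not recreated. Same counts: no container restarted.
    return parse_pods(before) == parse_pods(after)


before = """NAME                            READY   STATUS    RESTARTS   AGE
pod/my-nginx-5b56ccd65f-l7dm5   1/1     Running   0          29s
pod/my-nginx-5b56ccd65f-ldfp4   1/1     Running   0          29s"""
after = """NAME                        READY   STATUS    RESTARTS   AGE
my-nginx-5b56ccd65f-l7dm5   1/1     Running   0          4h49m
my-nginx-5b56ccd65f-ldfp4   1/1     Running   0          4h49m"""
print(pods_untouched(before, after))  # True
```

For anything beyond a quick check, structured output (kubectl get po -o json) is more robust than parsing the table.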

3.5 - Override Policy

Introduction

OverridePolicy and ClusterOverridePolicy define per-cluster configurations for a federated resource when it is propagated to different clusters. OverridePolicy can only act on namespaced resources, while ClusterOverridePolicy can act on both cluster-scoped and namespaced resources. Overrides are generally expressed as JSONPatch; in addition, a dedicated override syntax is provided for specific fields (including Image, Command, Args, Labels, and Annotations). Common usage scenarios are as follows:

- Configure provider-specific features through annotations. For example, Ingress and Service resources propagated to clusters of different cloud service providers can carry different annotations to enable load balancers of different specifications and the corresponding load-balancing policies.

- Independently adjust the number of replicas of an application in different clusters. For example, the my-nginx application declares 3 replicas, but you can use an OverridePolicy to set its replica count to 1 in Cluster A, 5 in Cluster B, and 7 in Cluster C.

- Independently adjust the container images used in different clusters. For example, when an application is distributed to both a private cluster and a public cloud cluster, an OverridePolicy can configure a separate image pull address for each cluster.

- Adjust the application's configuration for a specific cluster. For example, an OverridePolicy can inject a sidecar container into the application before it is applied to Cluster A.

- Add cluster information to resource instances distributed to a cluster, for example: apps.my.company/running-in: cluster-01.

- Roll out changes to resources in specified clusters. For example, when you need to change the application during major promotions, sudden traffic spikes, or emergency scale-outs, you can release your changes gradually to designated clusters to limit the risk; you can also delete the OverridePolicy or disassociate it from the resources to roll back to the state before the change.

About OverridePolicy and ClusterOverridePolicy

Apart from their kind, OverridePolicy and ClusterOverridePolicy have exactly the same structure. A resource can be associated with at most one OverridePolicy and one ClusterOverridePolicy, specified through the labels kubeadmiral.io/override-policy-name and kubeadmiral.io/cluster-override-policy-name respectively. If a namespaced resource is associated with both an OverridePolicy and a ClusterOverridePolicy, both take effect: the ClusterOverridePolicy is applied first, followed by the OverridePolicy. If a cluster-scoped resource is associated with both, only the ClusterOverridePolicy takes effect.

They are used as follows:

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: my-dep
    kubeadmiral.io/cluster-override-policy-name: my-cop # Overwrite this Deployment via ClusterOverridePolicy.
    kubeadmiral.io/override-policy-name: my-op # Overwrite this Deployment via OverridePolicy.
    kubeadmiral.io/propagation-policy-name: my-pp # Propagate this Deployment via PropagationPolicy.
  name: my-dep
  namespace: default
...
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubeadmiral.io/cluster-override-policy-name: my-cop # Overwrite this ClusterRole via ClusterOverridePolicy.
    kubeadmiral.io/cluster-propagation-policy-name: my-cpp # Propagate this ClusterRole via ClusterPropagationPolicy.
  name: pod-reader
...
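The association rules described in this section can be summarized in a small sketch that returns the override policies applied to a resource, in application order. The function effective_override_policies is hypothetical; it encodes only the documented rules: at most one policy of each kind, ClusterOverridePolicy before OverridePolicy, and OverridePolicy ignored for cluster-scoped resources.

```python
from typing import Dict, List, Tuple

COP_LABEL = "kubeadmiral.io/cluster-override-policy-name"
OP_LABEL = "kubeadmiral.io/override-policy-name"


def effective_override_policies(labels: Dict[str, str], namespaced: bool) -> List[Tuple[str, str]]:
    """Return (kind, name) of the override policies that take effect, in application order."""
    applied: List[Tuple[str, str]] = []
    # A ClusterOverridePolicy applies to both scopes and always goes first.
    if COP_LABEL in labels:
        applied.append(("ClusterOverridePolicy", labels[COP_LABEL]))
    # An OverridePolicy only takes effect on namespaced resources.
    if namespaced and OP_LABEL in labels:
        applied.append(("OverridePolicy", labels[OP_LABEL]))
    return applied


my_dep_labels = {"app": "my-dep", COP_LABEL: "my-cop", OP_LABEL: "my-op",
                 "kubeadmiral.io/propagation-policy-name": "my-pp"}
pod_reader_labels = {COP_LABEL: "my-cop",
                     "kubeadmiral.io/cluster-propagation-policy-name": "my-cpp"}
print(effective_override_policies(my_dep_labels, namespaced=True))
# [('ClusterOverridePolicy', 'my-cop'), ('OverridePolicy', 'my-op')]
print(effective_override_policies(pod_reader_labels, namespaced=False))
# [('ClusterOverridePolicy', 'my-cop')]
```

Because labels form a map, a resource can carry only one value per label key, which is why each kind of policy can be associated at most once.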

Writing OverridePolicy

An OverridePolicy can contain multiple override rules. Within one rule, users can select one or more target clusters in several ways (clusters, clusterSelector, and clusterAffinity) and configure multiple override operations.

A typical OverridePolicy looks like this:

apiVersion: core.kubeadmiral.io/v1alpha1
kind: OverridePolicy
metadata:
  name: mypolicy
  namespace: default
spec:
  overrideRules:
  - targetClusters:
      clusters:
      - Cluster-01 # Override the resource propagated to this cluster.
      - Cluster-02 # Override the resource propagated to this cluster.
      # clusterSelector:
      #   region: beijing
      #   az: zone1
      # clusterAffinity:
      # - matchExpressions:
      #   - key: region
      #     operator: In
      #     values:
      #     - beijing
      #   - key: provider
      #     operator: In
      #     values:
      #     - my-provider
    overriders:
      jsonpatch:
      - path: /spec/template/spec/containers/0/image
        operator: replace
        value: nginx:test
      - path: /metadata/labels/hello
        operator: add
        value: world
      - path: /metadata/labels/foo
        operator: remove

TargetClusters

targetClusters selects the target clusters in which the override takes effect. It offers three optional cluster selection methods:

- clusters: an explicit list of the clusters in which this override rule takes effect. Only resources scheduled to member clusters in this list are overridden by this rule.

- clusterSelector: matches clusters by labels in the form of key-value pairs. If a resource is scheduled to a member cluster whose labels match clusterSelector, this override rule takes effect in that member cluster.

- clusterAffinity: matches clusters by affinity terms on cluster labels. If a resource is scheduled to a member cluster that matches clusterAffinity, this override rule takes effect in that member cluster. It works like Pod node affinity; for details, see Affinity and anti-affinity.

The three selectors above are all optional. If multiple selectors are configured at the same time, the following rules apply:

- If clusterSelector is used, the target member cluster must match all of its labels.

- If clusterAffinity is used, a member cluster only needs to satisfy one of the matchExpressions entries, but all expressions within that entry must be matched.

- If two or three selectors are used at the same time, the target member cluster must satisfy every selector for the override rule to take effect.

- If none of the three selectors is set (that is, clusters is empty or has a length of 0, and both clusterSelector and clusterAffinity are empty), the rule matches all clusters.
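The matching rules above can be sketched as follows. This is a hypothetical model, not KubeAdmiral's implementation: it supports only the In, NotIn, Exists, and DoesNotExist operators, and, following Kubernetes node-affinity semantics, it treats an affinity term with no expressions as matching nothing.

```python
from typing import Dict, Optional


def expression_matches(labels: Dict[str, str], expr: dict) -> bool:
    """Evaluate one matchExpressions entry against a cluster's labels."""
    key, op, values = expr["key"], expr["operator"], expr.get("values", [])
    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        return key not in labels or labels[key] not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"operator {op!r} is not supported in this sketch")


def cluster_matches(cluster: str, labels: Dict[str, str], target: Optional[dict]) -> bool:
    """Return True if the override rule's targetClusters selects this cluster."""
    target = target or {}
    clusters = target.get("clusters") or []
    selector = target.get("clusterSelector") or {}
    affinity = target.get("clusterAffinity") or []
    # Each configured selector must be satisfied; unset selectors are ignored,
    # so an entirely empty targetClusters matches every cluster.
    if clusters and cluster not in clusters:
        return False
    # clusterSelector: every key-value pair must match.
    if any(labels.get(k) != v for k, v in selector.items()):
        return False
    # clusterAffinity: any one term may match, but all expressions in it must hold.
    if affinity and not any(
        bool(term.get("matchExpressions"))
        and all(expression_matches(labels, e) for e in term["matchExpressions"])
        for term in affinity
    ):
        return False
    return True


beijing = {"region": "beijing", "az": "zone1", "provider": "my-provider"}
rule = {"clusterAffinity": [{"matchExpressions": [
    {"key": "region", "operator": "In", "values": ["beijing"]},
    {"key": "provider", "operator": "In", "values": ["my-provider"]},
]}]}
print(cluster_matches("Cluster-01", beijing, rule))                                   # True
print(cluster_matches("Cluster-01", beijing, {"clusters": ["Cluster-02"], **rule}))   # False
```

The second call shows the AND rule: the cluster satisfies clusterAffinity but is not in the clusters list, so the override rule does not apply to it.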

Overriders

overriders indicates the overriding rules to be applied to the selected target cluster. Currently, it supports JSONPatch and encapsulated overwriting syntax for specified objects(including: Image, Command, Args, Labels, Annotations, etc.).

JSONPatch

The value of JSONPatch is a list of patches, specifies overriders in a syntax similar to RFC6902 JSON Patch. Each patch needs to contain:

- path: the path of the target field to override.
- operator: the operation to perform. Supported values are:
  - add: appends or inserts one or more elements into the resource.
  - remove: removes one or more elements from the resource.
  - replace: replaces one or more elements in the resource.
- value: the value for the target field. It is required when operator is add or replace, and is not needed when operator is remove.
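To illustrate how the three operators modify a resource, here is a minimal Python sketch that applies a list of patches in order. It assumes the resource is a plain dict decoded from JSON and uses the path/operator/value field names above. It is not KubeAdmiral's implementation, and it skips RFC 6902 details such as root-path operations and strict index bounds checks for add.

```python
import copy
from typing import Any, List


def _unescape(token: str) -> str:
    # Decode "~1" before "~0" so that "~01" becomes "~1" rather than "/".
    return token.replace("~1", "/").replace("~0", "~")


def _step(node: Any, token: str) -> Any:
    # Lists are indexed by position, objects by key.
    return node[int(token)] if isinstance(node, list) else node[token]


def apply_patches(obj: Any, patches: List[dict]) -> Any:
    """Apply add/remove/replace patches in order to a deep copy of obj."""
    result = copy.deepcopy(obj)
    for patch in patches:
        operator, path = patch["operator"], patch["path"]
        if not path.startswith("/"):
            raise ValueError(f"path must start with '/': {path!r}")
        tokens = [_unescape(t) for t in path.split("/")[1:]]
        parent = result
        for token in tokens[:-1]:
            parent = _step(parent, token)
        last = tokens[-1]
        if operator == "add":
            if isinstance(parent, list):
                # "-" appends; a numeric index inserts before that position.
                parent.insert(len(parent) if last == "-" else int(last), patch["value"])
            else:
                parent[last] = patch["value"]
        elif operator == "remove":
            del parent[int(last) if isinstance(parent, list) else last]
        elif operator == "replace":
            _step(parent, last)  # raises if the target does not already exist
            parent[int(last) if isinstance(parent, list) else last] = patch["value"]
        else:
            raise ValueError(f"unsupported operator: {operator!r}")
    return result
```

Working on a deep copy keeps the original template untouched, which mirrors the idea that overrides only change the copy propagated to a member cluster.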

Note:

- If you need to refer to a key containing ~ or / in its name, you must escape these characters as ~0 and ~1 respectively. For example, to get "baz" from { "foo/bar~": "baz" } you would use the pointer /foo~1bar~0.
- If you need to refer to the end of an array, you can use - instead of an index. For example, to refer to the end of an array named biscuits, you would use /biscuits/-. This is useful when you need to insert a value at the end of an array.
- For more details about JSON Patch, see https://jsonpatch.com.
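The escaping rule in the note follows RFC 6901 JSON Pointer. The short Python sketch below builds a pointer from raw keys and resolves it back. The function names are hypothetical helpers for illustration only.

```python
from typing import Any, Iterable


def escape_token(key: str) -> str:
    # Escape "~" first so the "~" introduced by "~1" is not escaped again.
    return key.replace("~", "~0").replace("/", "~1")


def to_pointer(keys: Iterable[str]) -> str:
    """Join raw keys into a JSON Pointer such as /foo~1bar~0."""
    return "".join("/" + escape_token(k) for k in keys)


def resolve(doc: Any, pointer: str) -> Any:
    """Follow a pointer through nested dicts and lists (no "-" support)."""
    node = doc
    for raw in pointer.split("/")[1:]:
        token = raw.replace("~1", "/").replace("~0", "~")
        node = node[int(token)] if isinstance(node, list) else node[token]
    return node
```

For instance, `to_pointer(["foo/bar~"])` produces "/foo~1bar~0", and `resolve({"foo/bar~": "baz"}, "/foo~1bar~0")` returns "baz". A pointer ending in "-" names the position after the last array element, so it is only meaningful for add and cannot be resolved to an existing value.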

Image

image overrides individual components of a container image address. An image address has the form [Registry '/'] Repository [ ":" Tag ] [ "@" Digest ]. The image overrider accepts the following parameters:

- containerNames: ignored when imagePath is set. If empty, the image override rule applies to all containers. Otherwise, the override targets only the specified container(s) or init container(s) in the pod template.
- imagePath: the path of the target image field, for example /spec/template/spec/containers/0/image. If empty, the system automatically resolves the image path when the resource type is Pod, CronJob, Deployment, StatefulSet, DaemonSet, or Job.
- operations: the operations to perform on the target image.
  - imageComponent: required. Specifies which component of the image address to operate on. Valid values are:
    - Registry: the address of the registry where the image is located.
    - Repository: the image name.
    - Tag: the image version.
    - Digest: the image identifier.
  - operator: specifies the operation. Valid values are addIfAbsent, overwrite, and delete. If empty, the default behavior is overwrite.
  - value: the value required by the operation. For addIfAbsent and overwrite, value cannot be empty.
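The component operations can be sketched in Python as follows. The sketch splits an image address into the four components, applies each operation in order, and joins the result. It makes several assumptions and is not KubeAdmiral's parser:

- A Docker-style heuristic decides whether the first path segment is a registry: the segment contains "." or ":", or equals "localhost".
- overwrite on a component that is currently absent simply sets it.
- The function names are hypothetical.

```python
from typing import Dict, List


def split_image(image: str) -> Dict[str, str]:
    """Split [Registry '/'] Repository [':' Tag] ['@' Digest] into components."""
    digest = ""
    if "@" in image:
        image, digest = image.split("@", 1)
    registry = ""
    first, sep, remainder = image.partition("/")
    # Assumed heuristic: the first segment is a registry host if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, image = first, remainder
    tag = ""
    # With any registry port removed, a remaining ":" separates the tag.
    repo, colon, maybe_tag = image.rpartition(":")
    if colon:
        image, tag = repo, maybe_tag
    return {"Registry": registry, "Repository": image, "Tag": tag, "Digest": digest}


def join_image(parts: Dict[str, str]) -> str:
    out = parts["Repository"]
    if parts["Registry"]:
        out = parts["Registry"] + "/" + out
    if parts["Tag"]:
        out += ":" + parts["Tag"]
    if parts["Digest"]:
        out += "@" + parts["Digest"]
    return out


def apply_image_operations(image: str, operations: List[dict]) -> str:
    parts = split_image(image)
    for op in operations:
        component = op["imageComponent"]
        operator = op.get("operator") or "overwrite"  # empty defaults to overwrite
        value = op.get("value", "")
        if operator in ("addIfAbsent", "overwrite") and not value:
            raise ValueError(f"{operator} requires a non-empty value")
        if operator == "addIfAbsent":
            if not parts[component]:  # only fill in a missing component
                parts[component] = value
        elif operator == "overwrite":
            parts[component] = value
        elif operator == "delete":
            parts[component] = ""
        else:
            raise ValueError(f"unsupported operator: {operator}")
    return join_image(parts)
```

Under these assumptions, `apply_image_operations("nginx:1.25", [{"imageComponent": "Registry", "operator": "addIfAbsent", "value": "cluster.io"}])` yields "cluster.io/nginx:1.25". An image that already names a registry keeps it unchanged.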

Example:

```yaml
apiVersion: core.kubeadmiral.io/v1alpha1
kind: ClusterOverridePolicy
metadata:
  name: mypolicy
spec:
  overrideRules:
    - targetClusters:
        clusters:
          - kubeadmiral-member-1
      overriders:
        image:
          - containerNames:
              - "server-1"
              - "server-2"
            operations:
              - imageComponent: Registry
                operator: addIfAbsent
                value: cluster.io
    - targetClusters:
        clusters:
          - kubeadmiral-member-2
      overriders:
        image:
          - imagePath: "/spec/templates/0/container/image"
            operations:
              - imageComponent: Registry
                operator: addIfAbsent
                value: cluster.io
              - imageComponent: Repository
                operator: overwrite
                value: "over/echo-server"
              - imageComponent: Tag
                operator: delete
              - imageComponent: Digest
                operator: addIfAbsent
                value: "sha256:aaaaf56b44807c64d294e6c8059b479f35350b454492398225034174808d1726"
```

Command and Args

command and args override the command and args fields of containers in the pod template. The overrider accepts the following parameters:

- containerName: required. Declares that this override targets the specified container or init container in the pod template.
- operator: specifies the operation. Valid values are append, overwrite, and delete. If empty, the default behavior is overwrite.
- value: a string array of command / args that will be applied to containerName.
  - If operator is append, the items in value (empty is not allowed) are appended to command / args.
  - If operator is overwrite, containerName's current command / args are completely replaced by value.
  - If operator is delete, items in value that match command / args are deleted.
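A minimal Python sketch of the three operators on a container's command or args list follows. It assumes that delete removes every element equal to any item in value. override_string_list and override_container are hypothetical names, and the pod spec is modeled as a plain dict.

```python
from typing import List, Optional


def override_string_list(current: Optional[List[str]], operator: str, value: List[str]) -> List[str]:
    current = list(current or [])
    operator = operator or "overwrite"  # empty operator defaults to overwrite
    if operator == "append":
        if not value:
            raise ValueError("append requires a non-empty value")
        return current + list(value)
    if operator == "overwrite":
        return list(value)
    if operator == "delete":
        # Assumption: every element equal to an item in value is removed.
        return [item for item in current if item not in value]
    raise ValueError(f"unsupported operator: {operator}")


def override_container(pod_spec: dict, field: str, container_name: str,
                       operator: str, value: List[str]) -> None:
    """Apply the override to the named container or init container in place."""
    for key in ("initContainers", "containers"):
        for container in pod_spec.get(key, []):
            if container["name"] == container_name:
                container[field] = override_string_list(container.get(field), operator, value)
```

With the server-3 rule from the example, a command of ["/bin/sh", "-c", "sleep 10s"] would become ["/bin/sh", "-c"] under the delete operator.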

Examples:

```yaml
apiVersion: core.kubeadmiral.io/v1alpha1
kind: ClusterOverridePolicy
metadata:
  name: mypolicy
spec:
  overrideRules:
    - targetClusters:
        clusters:
          - kubeadmiral-member-1
      overriders:
        command:
          - containerName: "server-1"
            operator: append
            value:
              - "/bin/sh"
              - "-c"
              - "sleep 10s"
          - containerName: "server-2"
            operator: overwrite
            value:
              - "/bin/sh"
              - "-c"
              - "sleep 10s"
          - containerName: "server-3"
            operator: delete
            value:
              - "sleep 10s"
    - targetClusters:
        clusters:
          - kubeadmiral-member-2
      overriders:
        args:
          - containerName: "server-1"
            operator: append
            value:
              - "-v=4"
              - "--enable-profiling"
```

Labels and Annotations

labels and annotations override the labels and annotations fields of Kubernetes resources. The overrider accepts the following parameters:

- operator: specifies the operation. Valid values are addIfAbsent, overwrite, and delete. If empty, the default behavior is overwrite.
- value: the map that will be applied to the resource's labels / annotations.
  - If operator is addIfAbsent, the items in value (empty is not allowed) are added to labels / annotations. Keys in value cannot conflict with existing labels / annotations.
  - If operator is overwrite, items in value that match existing labels / annotations are replaced.
  - If operator is delete, items in value that match existing labels / annotations are deleted.
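The following Python sketch models the three map operators. Several behaviors in it are assumptions rather than confirmed KubeAdmiral semantics:

- A key conflict under addIfAbsent raises an error.
- overwrite only replaces keys that already exist.
- delete matches entries by key and ignores the value given in value.

override_map is a hypothetical name.

```python
from typing import Dict


def override_map(current: Dict[str, str], operator: str, value: Dict[str, str]) -> Dict[str, str]:
    result = dict(current)
    operator = operator or "overwrite"  # empty operator defaults to overwrite
    if operator == "addIfAbsent":
        if not value:
            raise ValueError("addIfAbsent requires a non-empty value")
        conflicts = sorted(set(value) & set(result))
        if conflicts:
            # Assumption: a conflicting key is treated as an error.
            raise ValueError(f"keys already exist: {conflicts}")
        result.update(value)
    elif operator == "overwrite":
        # Assumption: only keys that already exist are replaced.
        for key, new_value in value.items():
            if key in result:
                result[key] = new_value
    elif operator == "delete":
        # Assumption: entries are matched by key; the given value is ignored.
        for key in value:
            result.pop(key, None)
    else:
        raise ValueError(f"unsupported operator: {operator}")
    return result
```

Applying the three labels entries from the example in order to {"version": "v1.0.0", "action": "sync"} would, under these assumptions, leave {"app": "chat", "version": "v1.1.0"}.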

Examples:

```yaml
apiVersion: core.kubeadmiral.io/v1alpha1
kind: ClusterOverridePolicy
metadata:
  name: mypolicy
spec:
  overrideRules:
    - targetClusters:
        clusters:
          - kubeadmiral-member-1
      overriders:
        labels:
          - operator: addIfAbsent
            value:
              app: "chat"
          - operator: overwrite
            value:
              version: "v1.1.0"
          - operator: delete
            value:
              action: ""
    - targetClusters:
        clusters:
          - kubeadmiral-member-2
      overriders:
        annotations:
          - operator: addIfAbsent
            value:
              app: "chat"
          - operator: overwrite
            value:
              version: "v1.1.0"
          - operator: delete
            value:
              action: ""
```

Order of effect

Multiple entries in overrideRules are applied in the order in which they are declared, so later rules take precedence over earlier ones.

Within a single entry of overrideRules, the overriders are applied in the following order:

- Image

- Command

- Args

- Annotations

- Labels

- JSONPatch

Because JSONPatch is applied last, it has the highest override priority.

Multiple operators within the same overrider are applied in the order in which they are declared, so later operators take precedence.
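The ordering rules can be modeled with a small Python sketch. It makes three assumptions:

- Each override rule is represented as a hypothetical dict mapping an overrider kind to a list of functions that mutate the resource.
- OVERRIDER_ORDER mirrors the list above.
- set_label is an illustrative helper, not part of KubeAdmiral.

```python
from typing import Callable, Dict, List

# Within one override rule, overrider kinds are applied in this fixed order.
OVERRIDER_ORDER = ["image", "command", "args", "annotations", "labels", "jsonpatch"]

# Hypothetical representation: each overrider is a function that mutates the resource.
Overrider = Callable[[dict], None]


def apply_override_rules(resource: dict, rules: List[Dict[str, List[Overrider]]]) -> dict:
    for rule in rules:  # declaration order, so later rules win
        for kind in OVERRIDER_ORDER:  # fixed order, so jsonpatch runs last
            for overrider in rule.get(kind, []):  # declaration order within a kind
                overrider(resource)
    return resource


def set_label(key: str, value: str) -> Overrider:
    """Hypothetical helper: returns an overrider that sets one label."""
    def apply(resource: dict) -> None:
        resource.setdefault("metadata", {}).setdefault("labels", {})[key] = value
    return apply
```

In this model, if a labels overrider and a jsonpatch overrider in the same rule both set the same label, the jsonpatch value remains, because jsonpatch runs last. The same "last write wins" effect explains why later rules and later operators take precedence.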

4 - Reference

5 - Contributing to KubeAdmiral

Reporting security issues

If you believe you have found a security vulnerability in KubeAdmiral, please read our security policy for more details.

Reporting General Issues

Any user is welcome to be a contributor. If you have any feedback for the project, feel free to open an issue.

To make communication more efficient, please check whether someone else has already reported the issue before submitting a new one. If it already exists, add your details in the comments of the existing issue.

Read more about how to categorize general issues.

How to Contribute Code and Documentation

Contributing code and documentation requires knowing how to submit pull requests on GitHub. It is not difficult if you follow the guides below: